Why is this AI’s big health care moment?

In Canada, the volume of available health data is exploding. The growing adoption of electronic health records (EHRs) and electronic medical records (EMRs), together with the proliferation of smart devices, is generating the data that serves as the lifeblood of AI solutions. The International Data Corporation (IDC) estimates that the compound annual growth rate for health care data will reach 36% by 2025.¹

This rapid growth in available health care data is enriching our ability to train complex AI models and generate new insights and solutions.

The effect is amplified by increasingly sophisticated data storage and integration technology. Historically, the Canadian health care sector and AI developers have struggled to unify and integrate data from disparate sources, including electronic health records, picture archiving and communication systems (PACS), lab systems, medical devices and more, to drive machine learning (ML) solutions.

Not anymore.

Data integration solutions now allow us to reduce data silos and stitch patient data together to develop novel AI solutions. For example, a data fabric uses metadata (that is, data about the data) to unify, harmonize and govern structured and unstructured health care data within a common architecture.

Put those developments into broader context, and the opportunities to extend AI's influence across the sector only grow. A proliferation of accessible "citizen AI" tools, including low- and no-code solutions, is bringing the power of AI within reach of users at all skill levels, technical and non-technical alike. This is also helping AI spread across health care organizations, from researchers to executives, and accelerating the move toward preventative health.

In the post-COVID era, with Canadians eager to adopt AI in society, all of this bodes well for leveraging AI in health care processes. Research shows 38% of Canadians say they've personally been in contact with or used an AI application. What's more, some 36% of Canadians have used ChatGPT.² Meanwhile, researchers have found that ChatGPT's responses to patient questions posted on a social media forum were rated significantly higher in quality and more empathetic than responses from physicians.³ However, organizations should weigh the limitations and risks of such technology. For example, the knowledge base of some GPT systems is at least a year old, so more recent information on treatment plans may not be available.

This increasing openness to AI is good news for advancing solutions in Canadian health care. The combination of enhanced data storage and integration technology, advances in AI and Canadians' willingness to adopt AI means the Canadian health care sector can deploy AI to make a profound impact on health outcomes, and on the patients and practitioners who bring the system to life.

How can AI positively impact Canadian health care?

Across the sector, health care organizations are already integrating AI solutions into clinical processes.

Unity Health Toronto is running more than 30 AI models daily, including its highly successful CHARTWatch algorithm, which identifies patients at high risk of clinical deterioration, such as death or ICU admission, and flags them for intervention by the medical team. The tool's final evaluation found a 20% reduction in mortality on the medical ward where the model has been deployed.

Also in Toronto, the University Health Network has launched an AI Hub. This collaborative centre was designed to augment human intelligence by continuously advancing AI technologies and accelerating implementation to deliver better patient outcomes and enhance clinical workflows. Since September 2023, the Hub has generated innovative solutions like Surgical Go-No-Go, which uses computer vision to provide surgeons with real-time guidance and navigation during operations. This solution helps avoid complications and optimize surgical outcomes.

In another example, Medly, a newly developed digital therapeutic platform for heart failure management, is reducing rehospitalizations by 50%. Its algorithm has been enhanced with AI to detect decompensation more effectively in patients at home, allowing the hospital to intervene sooner.

A partnership between the Vector Institute for Artificial Intelligence and Unity Health led to the development of GEMINI, a rich, centralized resource of anonymized patient data from more than 30 Ontario hospitals that has been standardized and optimized for AI and ML discovery. Vector researchers are using this data to conduct innovative research studies, including the development of cutting-edge privacy-enhancing technology. The Institute has also partnered with Kids Help Phone, using natural language processing (NLP) to adapt to the way young people speak and empowering frontline staff to offer more precise services and resources based on words, phrases and speech patterns.

Similarly exciting examples are emerging at Toronto’s Hospital for Sick Children (SickKids). The hospital has established the AI in Medicine for Kids (AIM) program to build targeted AI solutions that improve outcomes and delivery of care for children.

Collectively, these examples, and others like them taking shape nationwide, open the door to ever more possibilities. That said, health care providers, agencies and ministries looking to embark on the AI journey have a lot to consider.

Where should Canadian health care organizations focus to safely deploy AI solutions at scale?

Canada's health care organizations should kickstart AI deployment by concentrating on six key areas. Doing so can help them establish a strong data foundation, a precursor to developing and deploying AI solutions, and then create a broad AI strategy that connects use cases and development with an operating model to scale AI across the organization.

1. Keep regulation and ethical AI development at the forefront

Health care organizations are no strangers to regulations governing patient information and data protection, such as Ontario's Personal Health Information Protection Act (PHIPA). To make the most of AI, organizations must also take a proactive approach to building trust in AI. While AI can enhance clinical efficiency and outcomes, it may also produce less accurate predictions for minority populations and contribute to unequal access to care.⁴

Organizations should proactively adopt and implement practices that engender trust in AI among providers and patients. Consult credible sources such as the Vector Institute's Health AI Implementation Toolkit or the Government of Canada's guidelines to understand and prioritize ethical AI.

While staying compliant with applicable regulations remains essential, the regulatory environment is still evolving, so Canadian health care organizations may also look to international standards and frameworks, such as the ISO/IEC 42001 AI management system standard or the NIST AI Risk Management Framework, to build their ethical AI development practices.

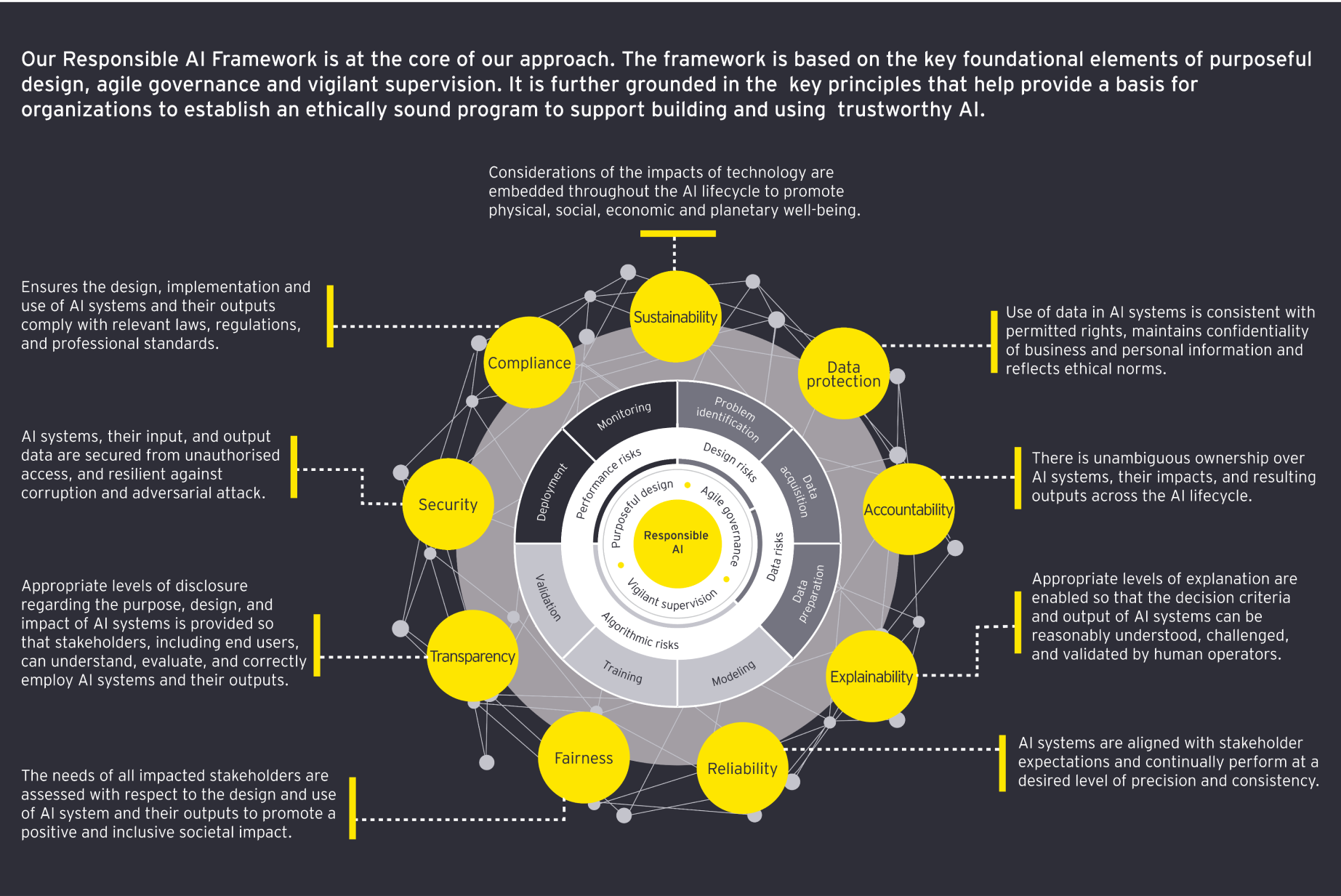

Organizations across the globe are developing their own responsible AI frameworks to guide their thinking on the responsible development, deployment and use of AI. For example, EY has its own Responsible AI Framework, which is grounded in the key pillars of purposeful design, agile governance and vigilant supervision of the processes and risks throughout the lifecycle of AI development and use.

EY’s Responsible AI Framework is enabled through its nine principles:

- Accountability

- Explainability

- Reliability

- Fairness

- Transparency

- Security

- Compliance

- Sustainability

- Data protection