EY refers to the global organization, and may refer to one or more, of the member firms of Ernst & Young Global Limited, each of which is a separate legal entity. Ernst & Young Global Limited, a UK company limited by guarantee, does not provide services to clients.

The key issues boards need to consider when implementing artificial intelligence technology.

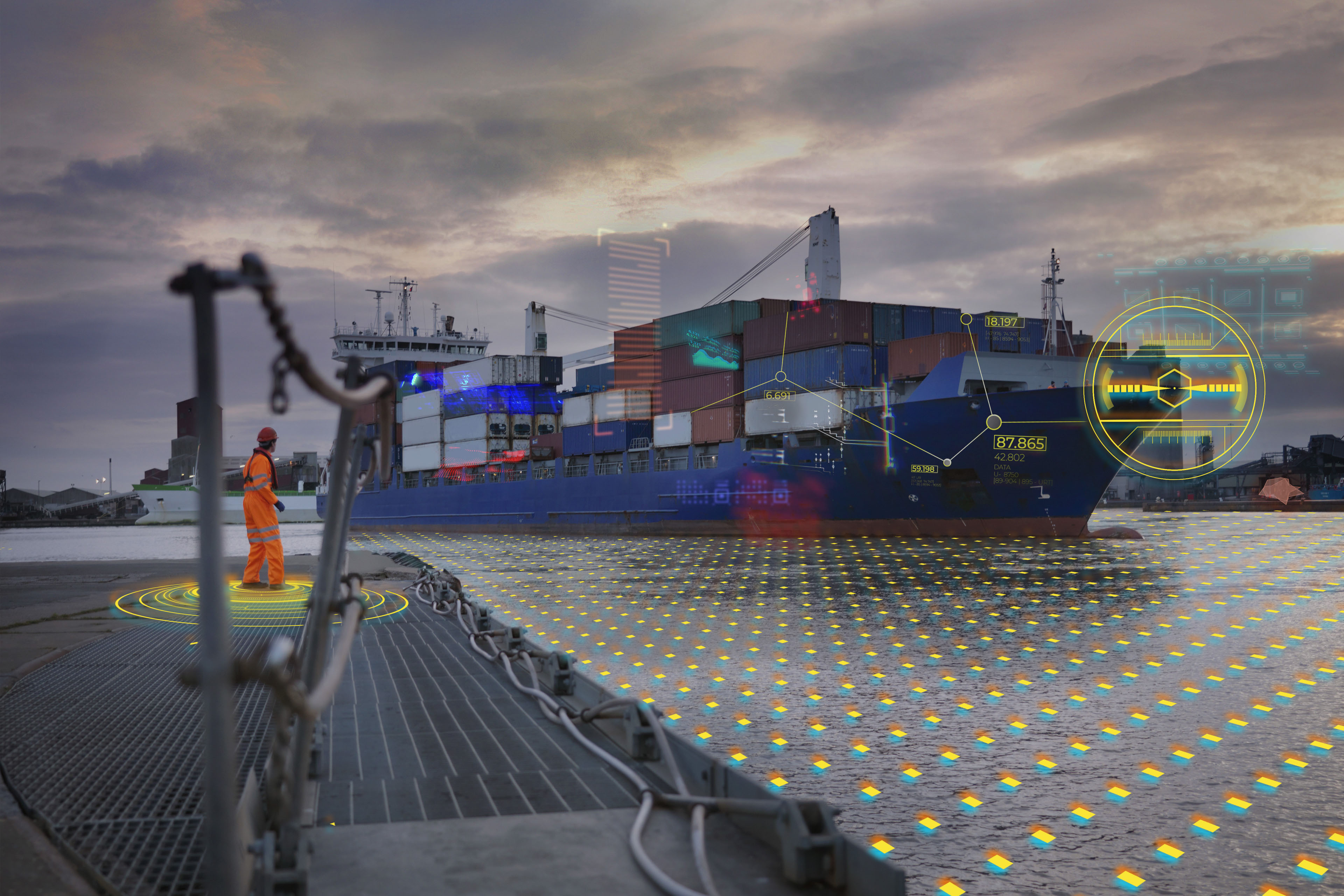

AI can enhance complex decision-making processes, which is why it is a catalyst for transformation in every industry. It allows onerous and time-consuming tasks to be completed more efficiently and effectively, and can give management teams a depth of insight that was never available before.

Machine learning – a form of AI where computer algorithms improve over time through their experience of using data – plays an increasingly prominent role in enterprise risk management. AI can be used to create sophisticated tools to monitor and analyze behavior and activities in real time. Since these systems can adapt to changing risk environments, they continually enhance the organization’s monitoring capabilities in areas such as regulatory compliance and corporate governance. They can also evolve from early warning systems into early learning systems that prevent threats materializing for real.

Risk mitigation

While AI is still developing, it can already be used to mitigate risk in some key areas. For example, machine learning can support more informed predictions about the likelihood of an individual or organization defaulting on a loan or a payment, and it can be used to build variable revenue forecasting models.

For many years, machine learning has successfully detected credit card fraud. Banks use systems that have been trained on historical payments data to monitor payments for potential fraudulent activity and block suspicious transactions. Financial institutions also use automated systems to monitor their traders by linking trading information with other behavioral information such as email traffic, calendar items, office building check-in and check-out times, and even telephone calls.

AI-based analytics platforms can manage supplier risk by integrating a host of different information about suppliers, from their geographical and geopolitical environments through to their financial risk, sustainability and corporate social responsibility scores.

Finally, AI systems can be trained to detect, monitor and repel cyber attacks. They identify software with certain distinguishing features – for example, a tendency to consume a large amount of processing power or transmit a lot of data – and then close down the attack.

Risks related to AI adoption

Despite these benefits, AI is also a source of significant new risks that must be managed. So it is important that the risks are identified that relate to each individual AI application and to each business unit that uses it.

Some of the main risks associated with AI include:

- Algorithmic bias: Machine-learning algorithms identify patterns in data and codify them in predictions, rules and decisions. If those patterns reflect some existing bias, the algorithms are likely to amplify that bias and may produce outcomes that reinforce existing patterns of discrimination.

- Overestimating the capabilities of AI: Since AI systems do not understand the tasks they perform, and rely on their training data, they are far from infallible. The reliability of their outcomes can be jeopardized if the input data is biased, incomplete or of poor quality.

- Programmatic errors: Where errors exist, algorithms may not perform as expected and may deliver misleading results that have serious consequences.

- Risk of cyber attacks: Hackers who want to steal personal data or confidential information about a company are increasingly likely to target AI systems.

- Legal risks and liabilities: At present, there is little legislation governing AI, but that is set to change. Systems that analyze large volumes of consumer data may not comply with existing and imminent data privacy regulations, especially the EU’s General Data Protection Regulation.

- Reputational risks: AI systems handle large amounts of sensitive data and make critical decisions about individuals in a range of areas including credit, education, employment and health care. So any system that is biased, error-prone, hacked or used for unethical purposes poses significant reputational risks to the organization that owns it.

What boards need to know

Boards should understand how AI technologies are being applied within the organization and externally. They should ensure the organization has appropriate structures in place to manage ethical issues and understand how it is addressing algorithmic bias.

They also need to be aware of emerging frameworks, policies and legislation to ensure that their business has the right balance between algorithmic transparency and accountability. Finally, boards should feel confident in the robustness of their “black box” – the term used to describe a machine learning system. This can be achieved through a thorough review that determines whether the outputs from the system are as expected and whether proper controls exist to monitor these systems as they evolve over time.

The key questions for boards to consider are:

- Does the board understand the potential impact of AI on the organization’s business model, culture, strategy and sector?

- How is the board challenging management to respond strategically to both the opportunities presented by AI and the risks associated with it?

- How is the organization using AI technology and new data sets for governance and risk management? How are the dashboards of the board and the audit committee changing?

- Does the organization have a talent strategy for recruiting and retaining people with the necessary skillsets to manage and staff AI-related projects?

- Has the board asked management to assess how the adoption of AI impacts the integrity of its finance function or its financial statements?

The views of third parties set out in this article are not necessarily the views of the global EY organization or its member firms. Moreover, they should be seen in the context of the time they were made.

Summary

Artificial intelligence (AI) presents opportunities when applied well and risks when applied badly. EY’s Jeanne Boillet looks at both sides of the coin and highlights aspects of AI that company boards need to pay close attention to.