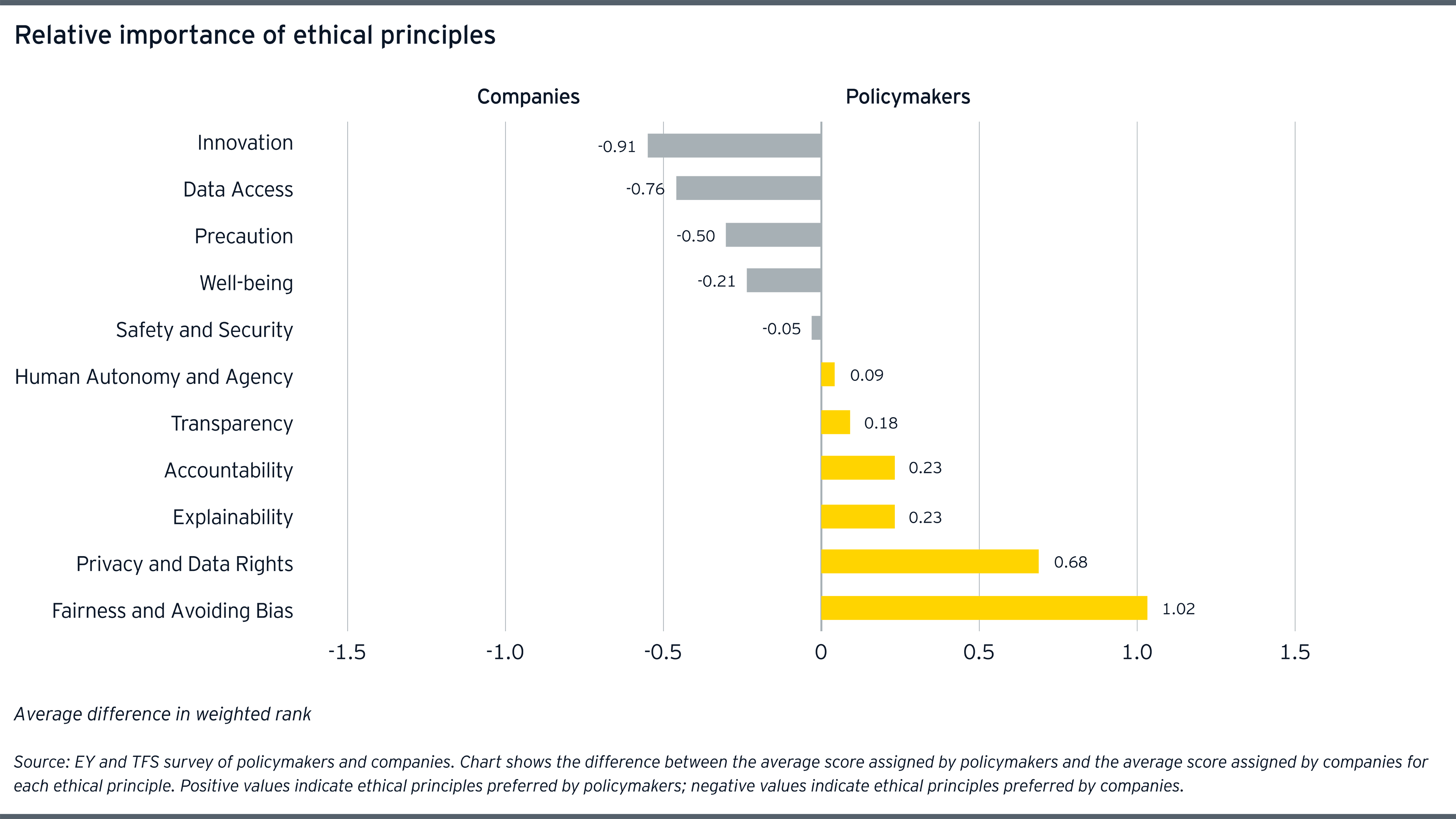

Significant misalignments around fairness and bias avoidance generate substantial market risks, as companies may be deploying products and services poorly aligned with emerging social values and regulatory guidance. For example, the recent social upheaval in the US amid the pandemic reveals intense public anger at discrimination. Brands deploying biased algorithms risk bearing that wrath.

The second-largest gap concerned the principle of innovation, which industry prioritized more strongly than policymakers did in all 12 use cases. While perhaps unsurprising, this matters because innovating with AI is inextricably linked to the risks it creates, and those risks vary by use case. If personal data is analyzed to build a new product, privacy is usually surrendered (for example, as “training data”) before the algorithm is ever released. If an AI inappropriately modifies someone’s behavior, that person may not be able to regain the autonomy they lost.

Companies’ stronger relative emphasis on the principle of innovation indicates they may not be fully assessing ethical risks when embarking on transformation projects or during the research and development of AI products and services. This finding is supported by the recent EY global board risk survey, which shows that just 39% of companies believe their enterprise risk practices adequately manage non-traditional or emergent risks, such as those created by building and deploying new AI applications. Improvements in risk management techniques are needed to balance these risks against the significant benefits AI offers.

The COVID-19 pandemic throws the third- and fourth-largest areas of divergence in our survey into particularly stark relief: inevitably, there is a trade-off between data access on the one hand, and privacy and data rights on the other. Data is a fundamental input into the production of new AI algorithms. Training an AI to scan human lung tissue requires people to submit medical records. Identifying clusters to enable track-and-trace means people must share some private location data and contact information.

Policymakers surveyed place privacy and data rights as a primary ethical consideration in the development of AI, while many industry professionals instead emphasize data access. The misuse of personal data has been a key driver of the recent backlash against technology companies, and the pandemic may be aggravating those concerns as invasive applications proliferate. While a recent survey shows that 53% of consumers are willing to share personal data to help monitor and track COVID-19, those preferences may not extend more broadly or persist once the immediate crisis has subsided.

Abrogating principles of privacy and fairness, whether intentionally or by accident, imposes significant costs on people. Discriminatory algorithms may further threaten some of society’s most vulnerable populations, including ethnic minorities and women, many of whom are disproportionately affected by the pandemic and the related economic fallout. Privacy risks are perhaps most acute for children, who lack the experience needed to protect their own privacy yet are now spending considerably more time online to continue distance learning amid widespread school closures. Policymaker consensus around the principles of privacy and fairness suggests that resolving conflicting ethical priorities within companies is critical to easing public concerns.

Transformation planning thus needs to consider emerging ethical risks. Trust in new technologies will vary depending on local cultural and political conditions, as well as regulatory and governance regimes. For example, the principle of “purpose limitation” is enshrined in Europe’s General Data Protection Regulation. This rule restricts how personal data collected for one use can later be reused for other applications, and it may encourage greater acceptance of invasive data sharing than in places lacking such restrictions. While responding to the pandemic is an urgent need, gaining efficiency at the expense of weakened freedoms and human rights will not build enduring public trust. The current low-trust environment translates into weaker adoption, which in turn undermines the vast potential of AI-powered public health applications.

The expectations gap

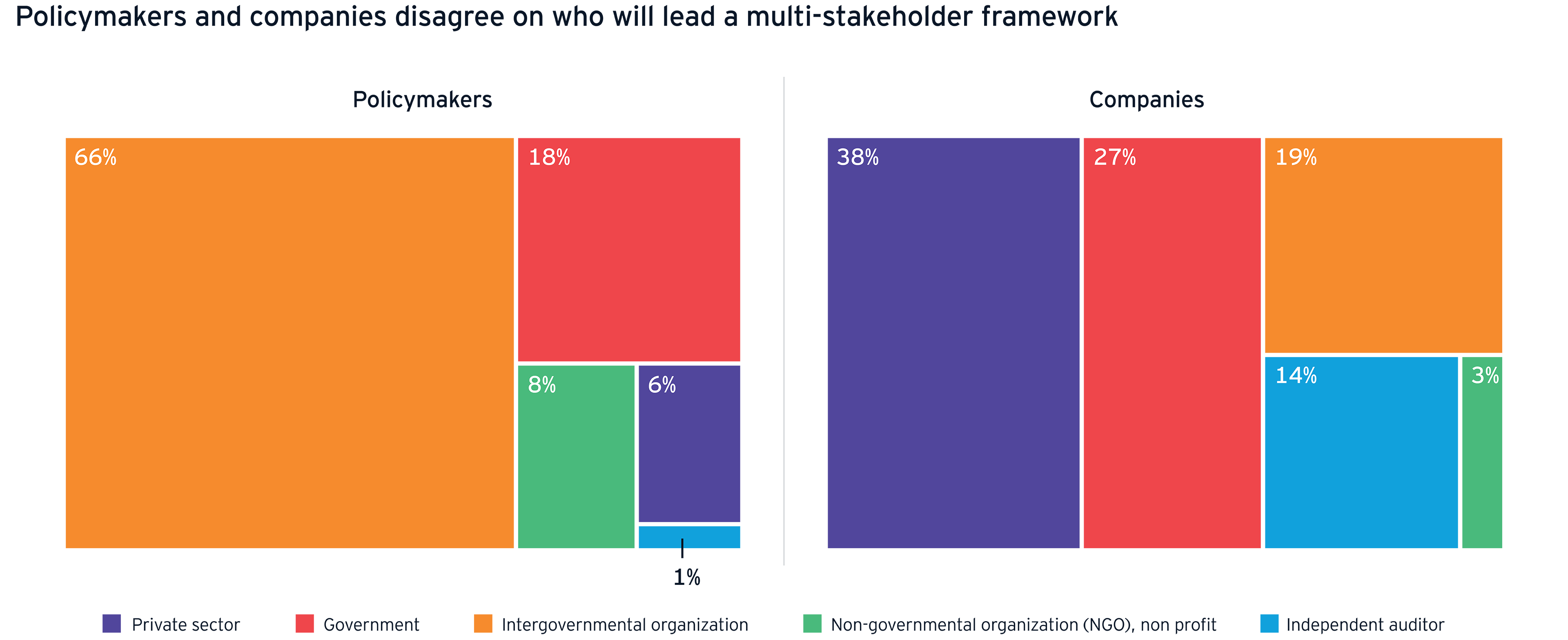

In addition to ethical misalignments, the survey reveals a large expectations gap. The industry has promised self-regulation for some time, but verifiable progress on the key challenges facing the technology sector, such as disinformation and privacy abuses, remains lacking. Thirty-eight percent of companies still believe they will lead any rule-making process, yet 84% of policymakers expect state actors to dominate. Such a large divergence in expectations is concerning and indicates that some companies will be surprised by the direction and scope of upcoming legislative changes. Legal and compliance risks ensue when the industry isn’t well aligned with policymakers. These risks are heightened when products are rushed to market to meet emergency requirements or when multi-year transformation plans are implemented in a matter of weeks.

With the deliberative phase of AI regulation coming to an end, policymakers are now clearly moving beyond principles and toward practice. The COVID-19 pandemic has not slowed this legislative agenda; rather, it is providing fresh impetus as AI-powered public health applications multiply. This means the window allowing companies to voluntarily align with the emerging governance framework is closing quickly. Business as usual risks a larger adjustment burden when stricter governance arrives.

Mitigating these risks before they materialize requires leadership commitment to building trust; deployed AI applications must reflect company values. AI should engender, not endanger, an individual’s autonomy; defend, not desecrate, human rights; and promote, rather than imperil, social well-being.

Embracing ethical AI

As firms realize the benefits of AI by bringing more products and services to the market, firmer governance will aid in risk management. Appropriate regulations can keep AI safe and secure without violating individuals’ privacy or fundamental freedoms. Better rules will keep businesses fully accountable for the results of their AI innovations. And stronger safeguards will help ensure Trusted AI deployed in the real world is fair, transparent and explainable. This will increase public trust and, therefore, consumer adoption, unlocking new products and strategies more quickly, and turbocharging the use of AI in transformation agendas.

The COVID-19 crisis puts ethical AI in focus, making it a central feature of any digital transformation strategy. The first step toward realigning with the emerging ethics is to foster an internal consensus around key principles across divisions, including technology, data, risk, compliance, legal, sales, HR and management. Engaging a broader set of stakeholders, such as customers who may be discriminated against by AI products and services, promotes trust in a volatile social climate. For example, to minimize the risk of algorithmic bias, businesses should actively build diverse and inclusive training data sets that fairly represent vulnerable groups. In the current social climate, the careless deployment of potentially discriminatory algorithms represents a substantial and growing brand risk. Companies might also consider validating their software with external audits of their production processes, data inputs and algorithmic outputs.

Transparency also helps build the trust needed to drive adoption. Companies should convert ethical principles into clear, published guidelines for supplying AI products and services. Lines of accountability should be formalized. Corporate policies and procedures are required to facilitate regular reviews and ongoing risk assessments, and to update systems and products accordingly. Management must provide employees with the resources and training required to reinforce these crucial foundations and to continue learning as the environment evolves. Consulting with policymakers to understand how emerging ethical principles will influence AI regulatory developments in their sector helps ensure ongoing alignment.

Weak consumer trust risks slowing the adoption of transformational, even lifesaving, technologies. As the health crisis expands into a social and economic crisis, ethical AI is becoming a prerequisite to building a better working world.

Summary

A powerful consensus is emerging among policymakers regarding principles for the ethical application of AI. Governments are set to convert these ethical principles into enforceable rules and regulations, but industry and policymakers disagree on governance approaches and diverge on key principles. Poor alignment diminishes public trust in AI, slowing the adoption of critical applications. New risks are emerging as accelerated digital transformation plans encounter severe public health, social and economic conditions. Closing the AI trust gap is a top priority.