EY refers to the global organization, and may refer to one or more, of the member firms of Ernst & Young Global Limited, each of which is a separate legal entity. Ernst & Young Global Limited, a UK company limited by guarantee, does not provide services to clients.

Privacy is one of the main concerns regarding artificial intelligence, yet means to mitigate some of the privacy risks already exist.

The ICO has issued guidance on defining the legal basis for processing when collecting personal data for the development of artificial intelligence (AI).

Anonymization can sometimes be undone; however, there are additional means to protect personal data in AI, such as differential privacy and federated learning.

The outcomes of AI can be biased or even tampered with. The EU Artificial Intelligence Act regulates, among other things, the fairness, explainability and security of AI.

How to build confidence in AI: ensuring privacy with proper design

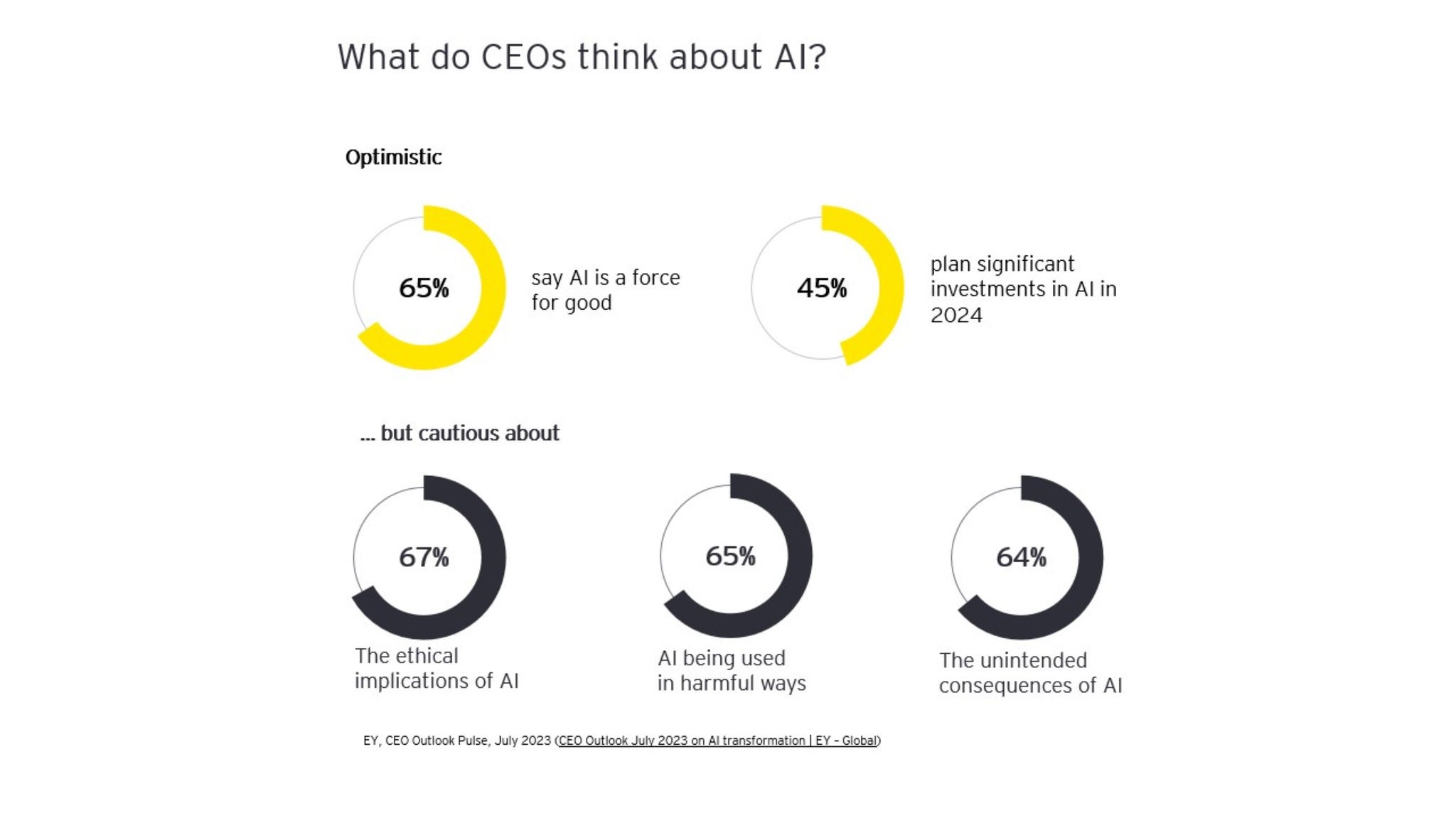

According to the EY CEO Outlook of July 2023, 65% of the CEOs surveyed believed that artificial intelligence (AI) is a force for good. However, the majority of respondents are concerned about the ethical implications and unintended consequences of AI, with privacy being their main concern.

Sixty-five percent of the CEOs believe that AI is a force for good but are concerned about its ethical implications and unintended consequences.

With current technological advancements in AI, new risks naturally emerge. However, we have long been familiar with terms such as “algorithm,” “automated decision-making” and “machine learning” (ML) through real-life applications like email spam filters and automated bank loan decisions. Thus, some of the challenges with AI are not entirely new but are amplified as AI spreads to new areas of life. The good news is that since AI did not develop overnight, some of its associated risks have been acknowledged for quite some time, and ways to mitigate privacy-related risks already exist.

In this text, I aim to share some thoughts and available online sources on protecting personal data in the context of AI. The text is divided into three main questions: whether personal data is lawfully collected for the development and use of AI, how personal data is protected, and how our rights are considered when AI makes its predictions or decisions.

Can we trust that our personal data is lawfully collected by companies using AI solutions?

Both the large language models (LLMs) and image creation technologies have been trained with massive amounts of content available online stirring debates over copyright law. When the data processed is personal, our privacy can also be violated. One of the most well-known cases involves the French authority Commission nationale de l’infomatique et des libertés (CNIL), which issued fines to a US-based facial recognition technology company for scraping photos of individuals online, including from social media platforms, and then selling rights to access this database to recognize a person when provided with a photo. Although the content scraped was made public by the data subjects themselves, people did not expect their photos to be used in this way. The French authority determined that there was no legal basis for Clearview AI’s actions and required the deletion of photos of any residents of France. When no evidence of compliance was provided by Clearview AI, a fine of over €5 million was issued. As data scraping becomes more of an issue, the Dutch Data Protection Authority, Autoriteit Persoonsgegevens, has recently issued guidelines for scraping personal data online (available only in Dutch).

It is possible to request data subject consent to obtain personal data for the specific purpose of developing an AI model. When data subject consent has not been requested, the GDPR allows secondary processing (i.e., processing personal data for purposes other than those for which it was originally collected) for research, development and innovation, when permitted by member state law. When neither consent nor national law is in place, secondary processing is allowed if the secondary purpose is compatible with the original purpose of processing and certain considerations are taken. The British Information Commissioner’s Office (ICO) has issued guidance on the most common privacy issues around AI and data protection, including defining the lawful basis according to the UK GDPR.

But wouldn’t it be easier to use synthetic data to train the model? While fake data does not have the same statistical qualities as real data, synthetic data is generated from a real database and can therefore be used to train and test an AI model without compromising real personal data. Gartner estimated that by 2024, 60% of all data used to train and test AI models would be synthetic. However, a recent studies indicate that when an AI model encounters a large amount of synthetic data, it may lead to model collapse. Given these findings, it will be interesting to see whether synthetic data will be as widely used as previously estimated.

In addition to the legal basis for collecting and processing personal data, other general personal data processing principles — such as data accuracy, data minimization, purpose limitation, data integrity and confidentiality apply to AI technology as well. The ICO has also issued a separate AI and Data Protection Toolkit with privacy controls for the entire AI lifecycle, addressing these topics in detail.

What could companies do to mitigate privacy risk and build customer trust?

Embed privacy by design principles throughout the AI lifecycle.

Ensure there is a valid legal basis for processing personal data when collecting data to train and test AI models.

Use synthetic data where possible. If personal data is required, minimize its use and be transparent about how it is used.

Conduct a data protection impact assessment and apply appropriate safeguards to mitigate perceived risks. Consult the data protection authority, if necessary, and be transparent about the use of personal data.

Use established frameworks such as the Framework for Artificial Intelligence (AI) Systems Using Machine Learning (ML) (ISO/IEC 23053:2022) to secure personal data.

Can we trust that personal data is processed securely?

An organization accidentally leaked sensitive data to OpenAI, leading to a company-wide ban on using generative AI tools in 2023. Moreover, dozens of terabytes of sensitive data was leaked to a public GitHub repository when another company was sharing their AI learning models. The risks associated with processing personal data can be significantly reduced by removing identifying information from datasets or collecting data in an aggregated form.

Pseudonymization or anonymization of personal data is a key security measure when developing AI. This can be achieved through methods such as tokenization or masking personal data in datasets. However, it may still be possible to de-anonymize personal data, especially when adding context from other sources to the original dataset. This auxiliary information may come from sources such as social media platforms or location data. Sensitive data may also be inferred from other data, with one of the most well-known examples being an algorithm predicting pregnancy from retail history a decade ago. Inferring sensitive data from datasets may also involve an adversarial attack; in an inference attack, the adversary may retrieve or reconstruct AI model’s training data or use parts of the training data to expose sensitive attributes in the dataset.

Differential privacy is not a specific process but a mechanism considered resistant to de-anonymization by auxiliary information. This mechanism uses a mathematical equation that treats all information as personal information. A company using the differential privacy mechanism has a “privacy budget” or “privacy loss,” which determines how much noise is added to query results. Adding noise ensures that no single piece of data can be traced back to an individual. This mechanism is used by many global companies in their products.

From an adversarial perspective, storing large amounts of personal data in a single repository is tempting, as the data can, for example, be sold or used in other attacks, such as identity theft. A newer concept, federated learning, offers a decentralized way of training new AI models, where the personal information never leaves the endpoint device, and only training results are shared. Security is enhanced by encryption at the endpoint device and the application of differential privacy techniques. Some of the privacy-enhancing technologies (PETs) awarded by NIST in 2023 already applied federated learning in areas such as crime detection and pandemic forecasting.

What could companies do to mitigate the privacy risk and stir customer trust?

Carefully consider whether personal data is necessary for training the model. Use anonymization and pseudonymization techniques and add noise to datasets where possible.

Ensure that basic information security measures, such as robust identity and access management practices, are in place. Encrypt personal data.

Conduct AI threat modeling, vulnerability scanning and penetration testing. Implement anomaly detection and behavioral monitoring techniques to identify unusual extraction activities.

Regularly update and retrain machine learning models to changing attack vectors.

The human error factor is always present but can be reduced with employee training.

Can we trust that AI will treat us fairly?

The challenges with “big data” are hardly new: The data used to train a model may be biased due to inherent biases in the data itself, and the AI model may have been built with criteria that lead to unfair outputs for those to whom it is applied. Several real-world examples highlight how algorithms can be biased. Some of the most well-known cases include a hiring algorithm, which was discriminatory toward female applicants, and the COMPAS algorithm, used in judicial proceedings in some US states, which was biased against minorities. To avoid these types of inherent biases, the EU AI Act (Art. 10) allows testing high-risk AI models (i.e., “AI systems that pose significant risks to the health and safety or fundamental rights of persons”) with special categories of data and data related to criminal data and offences “[…] in order to ensure the bias monitoring, detection and correction in relation to high-risk AI systems.” However, the regulation also states that the EU AI Act does not provide valid legal grounds for processing special categories of data or any other personal data. Therefore, a legal basis for testing high-risk AI systems with Art. 9 and 10 data should still be defined according to the limitations set by the GDPR.

AI typically involved a trade-off between accuracy and explainability. In other words, the better the algorithm performs, the less we can explain how it reached its conclusions. What happens to our rights if no one knows how the decision was actually made? Explainable AI (XAI) is a method that provides justification for decisions made by AI. Explainable AI in the financial sector has been further described by other EY colleagues in this post.

Malicious attacks can also be made to change AI decisions, affecting the fairness of the decisions made. An output integrity attack hijacks the real output and changes it to a false one, resembling a traditional “man in the middle” attack. In a data poisoning attack, the raw data, training data or test data of an AI model can be tampered with to make the AI model work in a way favorable to the attacker. Data poisoning can be conducted, for example, via model skewing, where the attacker pollutes training data so that the model ends up mislabeling bad data as good data. AI models typically have a feedback loop, which can also be weaponized to poison the data behind the AI model.

To support human oversight of decision-making, the European Union Artificial Intelligence Act (EU AI Act) states that human oversight is strictly necessary for high-risk AI systems. AI systems classified as “high-risk” include those used in immigration, law enforcement, employment, education and the medical sector. The Act also poses requirements for data quality (Art. 10), transparency (Art. 13) and technical solutions to address AI-specific vulnerabilities (Art. 15) on a general level.

What could companies do to mitigate the privacy risk and stir customer trust?

Use sensible data sampling. Conduct thorough testing with test cases also based on the risk assessments conducted.

Explain decisions made by AI, and remember the requirement to be open about your algorithms.

Avoid creating a direct loop between feedback and penalization.

Keep the “human-in-the-loop” in decision-making that significantly affects the data subject.

What the future may hold

The EU AI Act was approved by the Council of the European Union in May 2024, and an executive order on AI was issued in the US last year. It is expected that regulation on AI will increase and be supplemented with more specific guidelines. The near future might also bring more of the following:

AI fighting AI: We will likely see more AI tools on the market to supervise company environments against specific model attacks.

New ways for the communities to fight against stealing their data might also emerge. For example, a university in the US developed a Nightshade tool that allows artists to defend against non-consensual online scraping of their art. If content pixelated with the tool is used for AI model training, it will break the AI model.

The EU AI Act requires national authorities to create secure sandbox environments to guarantee secure development of AI, even for smaller startups.

Authorities may take punitive actions against AI systems, such as partial removal of data from the AI model. This process, known as algorithmic disgorgement, has been used by the US Federal Trade Commission (e.g., in the Everalbum case, where all algorithms trained with improperly obtained photos were also required to be deleted) and is also being discussed in the context of exercising the data subject’s “right to be forgotten” in AI models.

Summary

Artificial intelligence solutions pose risks to privacy that are, to some extent, specific to the technology. However, there are already means to mitigate privacy risks related to, for example, the acquisition and anonymization of personal data, as well as fairness and protection. As AI becomes increasingly regulated, authorities have the mandate and means to both support ethical AI and take punitive action against companies that do not comply with regulations.