High-risk systems cover a wide range of AI systems used in both the private and public sectors. In November 2021, the Council of the European Union proposed an amendment to the AIA stating that high-risk systems should also include AI systems used by insurers for premium setting, underwriting and claims assessment. The Council elaborates on this stance in the same proposal:

“AI systems are also increasingly used in insurance for premium setting, underwriting and claims assessment which, if not duly designed, developed and used, can lead to serious consequences for people’s life, including financial exclusion and discrimination.”

If passed, the Council’s proposal would have significant consequences for insurers, since it would cover not only new AI models but also existing models used in pricing, underwriting and claims.

How to best prepare for the new AI regulation

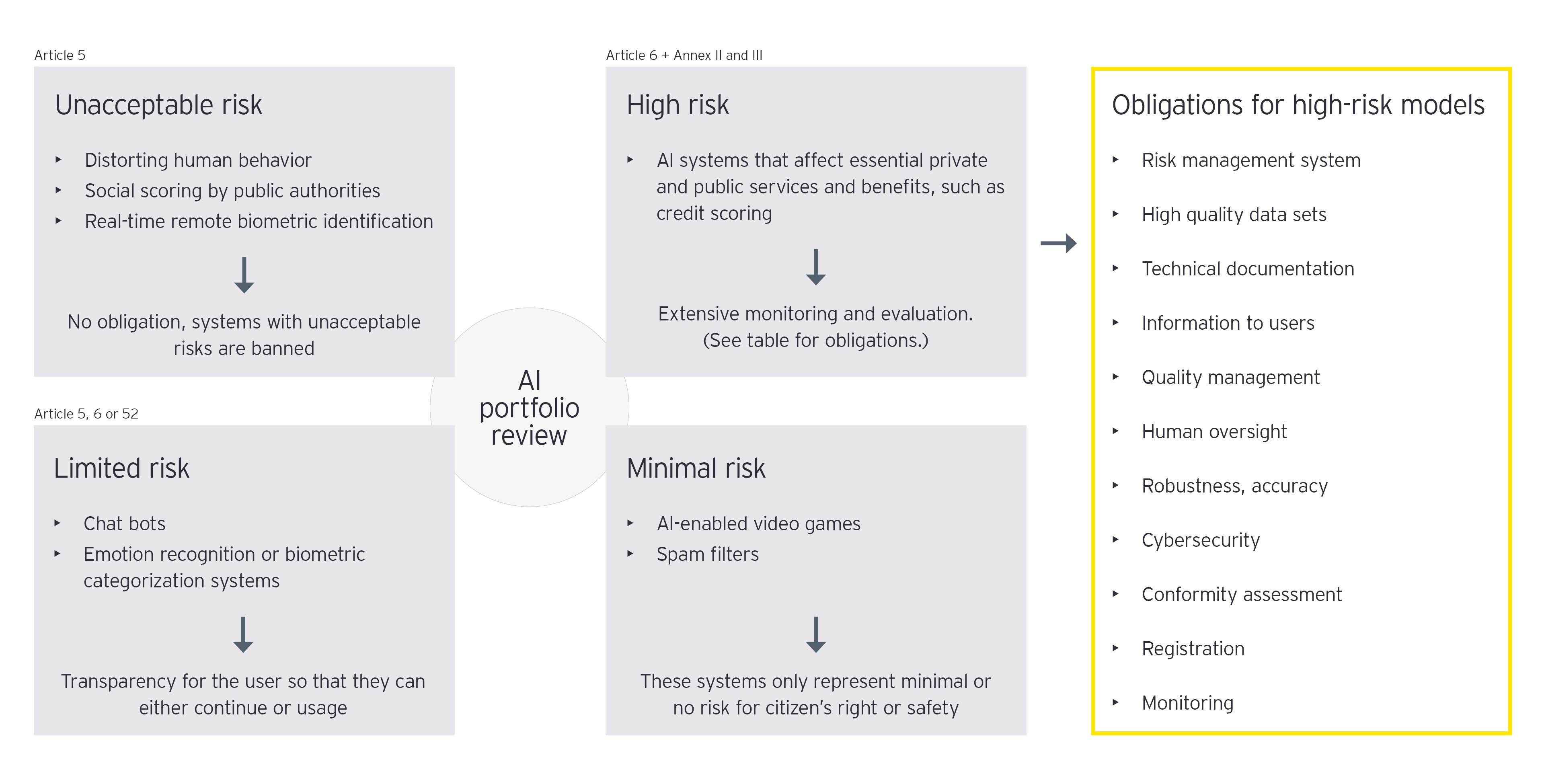

The AIA has not yet been passed, and it may be a few years before it takes full effect. However, as shown in Figure 1, the change in risk from AI models is real and can have large consequences for insurers. Reputational risk is high if data is used improperly, if a model is biased, or if decisions are made automatically in a way that may put customers at an unlawful or unethical disadvantage.

For this reason, and because complying with the AIA may require significant work involving many stakeholders, we recommend reviewing the existing risk and governance frameworks for data and models now.

Insurance companies should consider building an inventory of systems that apply AI models and, for each model, assessing the risks and defining key risk indicators (KRIs) that allow the company to track, prioritize and control the related risks.
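To make the idea of a model inventory with key risk indicators more concrete, here is a minimal sketch of how such a register could be structured. It assumes each model gets an inherent-risk score on a simple 1 to 5 scale and that each key risk indicator (KRI) is a single measured value compared against a tolerance threshold. All class names, model names, KRI names and thresholds are hypothetical illustrations, not requirements taken from the AIA or the Council's proposal.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class KeyRiskIndicator:
    """Hypothetical KRI: a measured value compared against a tolerance threshold."""
    name: str
    value: float
    threshold: float
    higher_is_worse: bool = True

    def breached(self) -> bool:
        # Breached when the value crosses the threshold in the unfavourable direction.
        if self.higher_is_worse:
            return self.value > self.threshold
        return self.value < self.threshold


@dataclass
class ModelRecord:
    """One entry in a hypothetical AI model inventory."""
    name: str
    use_case: str        # e.g. "pricing", "underwriting", "claims"
    inherent_risk: int   # assumed scale: 1 (low) to 5 (high)
    kris: List[KeyRiskIndicator] = field(default_factory=list)

    def breach_count(self) -> int:
        # Count how many of this model's KRIs are currently breached.
        return sum(k.breached() for k in self.kris)


def prioritize(inventory: List[ModelRecord]) -> List[ModelRecord]:
    """Order models by number of KRI breaches, then by inherent risk (highest first)."""
    return sorted(inventory, key=lambda m: (m.breach_count(), m.inherent_risk), reverse=True)


# Illustrative inventory; the figures are made up for the example.
inventory = [
    ModelRecord("motor_pricing_gbm", "pricing", 4, [
        KeyRiskIndicator("premium_gap_between_groups", 0.12, 0.05),
        KeyRiskIndicator("share_of_decisions_reviewed_by_human", 0.30, 0.20,
                         higher_is_worse=False),
    ]),
    ModelRecord("claims_fraud_score", "claims", 5, [
        KeyRiskIndicator("false_positive_rate", 0.04, 0.10),
    ]),
    ModelRecord("home_underwriting_rules", "underwriting", 2, []),
]

for model in prioritize(inventory):
    print(model.name, model.use_case, model.breach_count(), model.inherent_risk)
```

Ranking first on breached KRIs and then on inherent risk is only one simple prioritization choice; an insurer would likely weight indicators differently and document the rationale as part of its governance framework.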

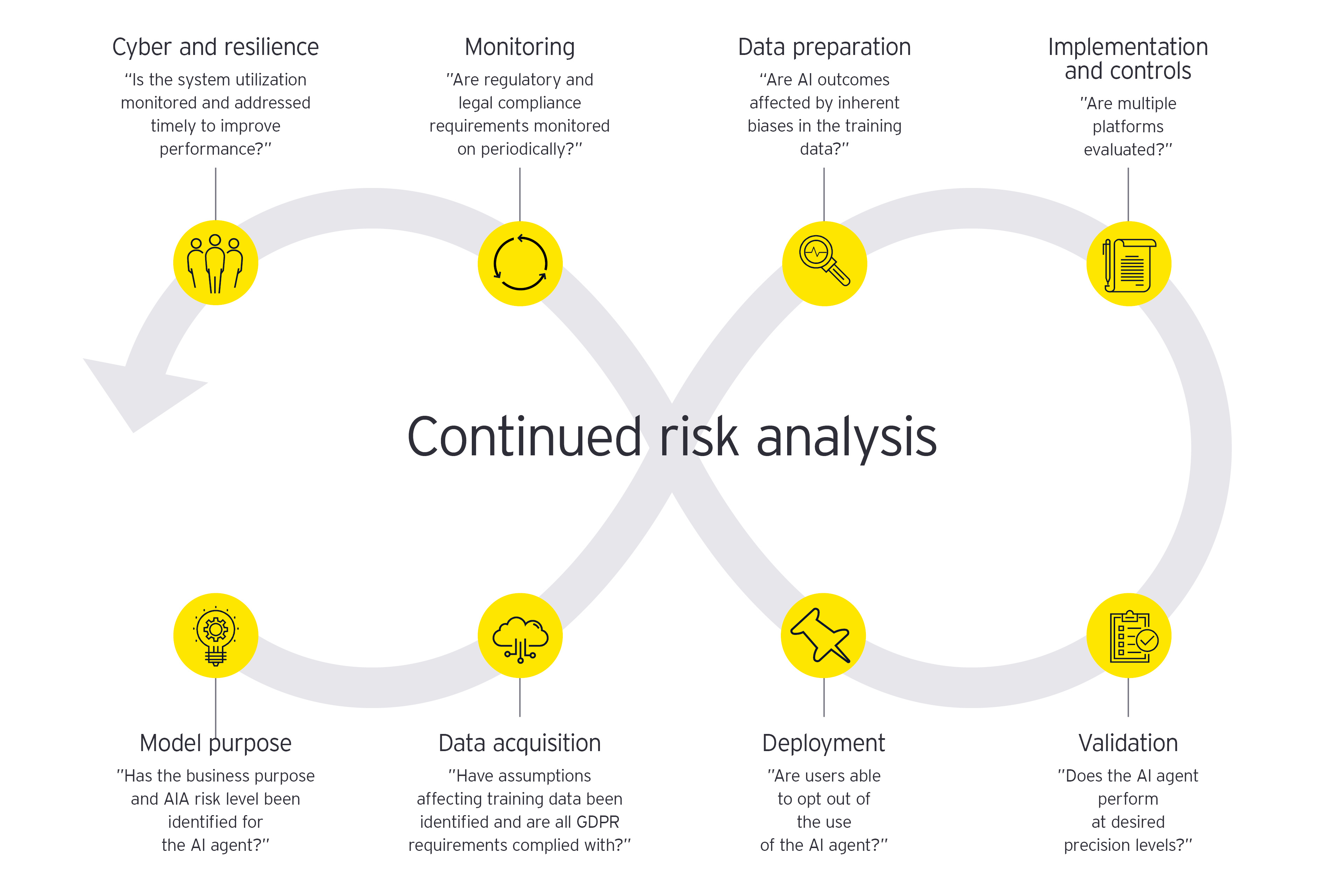

Figure 2 shows examples of questions that should be asked during the life cycle of an AI model.