EY refers to the global organization, and may refer to one or more of the member firms of Ernst & Young Limited, each of which is a separate legal entity. Ernst & Young Limited is a Swiss company with registered seats in Switzerland providing services to clients in Switzerland.

How EY can help

The EU AI Act will be adopted shortly and will have far-reaching extraterritorial impact. Because ensuring compliance is often more costly and complex once AI systems are in operation than during development, we recommend that firms start preparing now with a professional AI Act readiness assessment and early adaptation.

As AI accelerates, its ability to transform performance and productivity could translate into huge value in varied sectors, from banking and healthcare to consumer goods. However, for many businesses, the buzz around AI has yet to yield genuine breakthroughs. While organizations have adopted AI in piecemeal form or launched pilot projects, these important first steps are in reality a response to uncertainty. Now is the time to move from siloed projects to a cohesive and comprehensive strategic roadmap for transformation.

The challenge will be to define the organization’s AI strategy and AI governance, and to implement frameworks that absorb and integrate this transformative change in as controlled and secure a way as possible. A cornerstone of this journey will be to maintain the organization’s level of digital trust; getting this wrong could result in a loss of customers, market share and brand value. Conversely, those that get it right will be able to differentiate themselves from their competitors in the digital economy as they look to disrupt their business and enter new markets. But how can an organization transform itself to such a large extent while maintaining its level of digital trust?

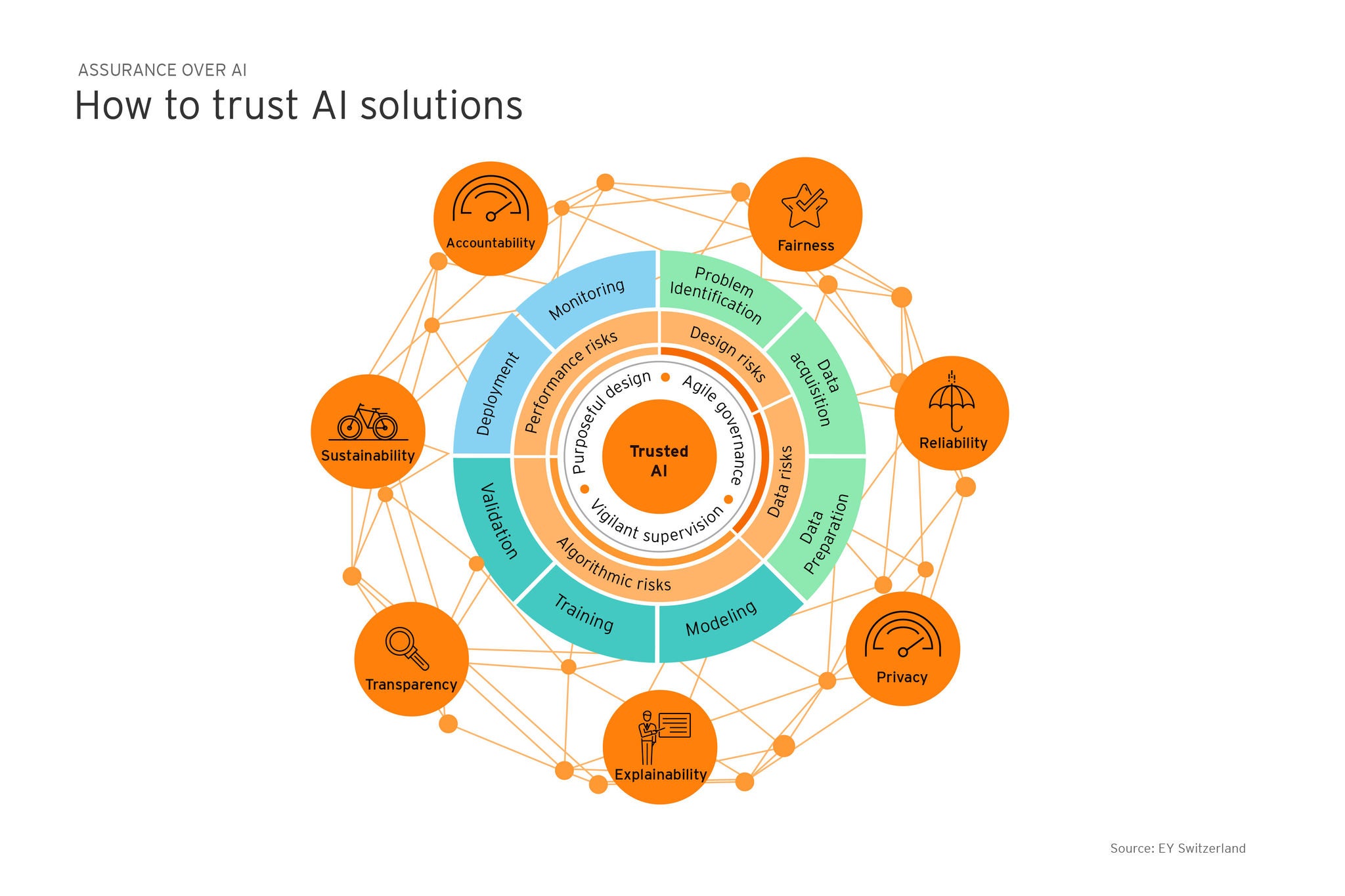

Trusted AI framework

With the risks and impact of AI spanning technical, ethical and social domains, a new framework for identifying, measuring and responding to the risks of AI is needed to build and maintain digital trust. The EY Trusted AI Framework, with its seven attributes, is built on the solid foundation of existing governance and control structures, but also introduces new mechanisms to address the unique risks of AI.