EY refers to the global organisation, and may refer to one or more, of the member firms of Ernst & Young Global Limited, each of which is a separate legal entity. Ernst & Young Global Limited, a UK company limited by guarantee, does not provide services to clients.

The boardroom tension: Speed versus stewardship

- The executive view: Your leadership team has a dozen AI pilots proving their worth. The pressure to go live is intense.

- The board view: Directors want assurance. Where’s the risk register? How do we know the AI isn’t misrepresenting facts, introducing bias or breaching regulations? How will we track value and risk over time?

Both perspectives are right. Moving too slowly risks losing market share. Moving too fast risks eroding public trust and incurring regulatory penalties and reputational damage.

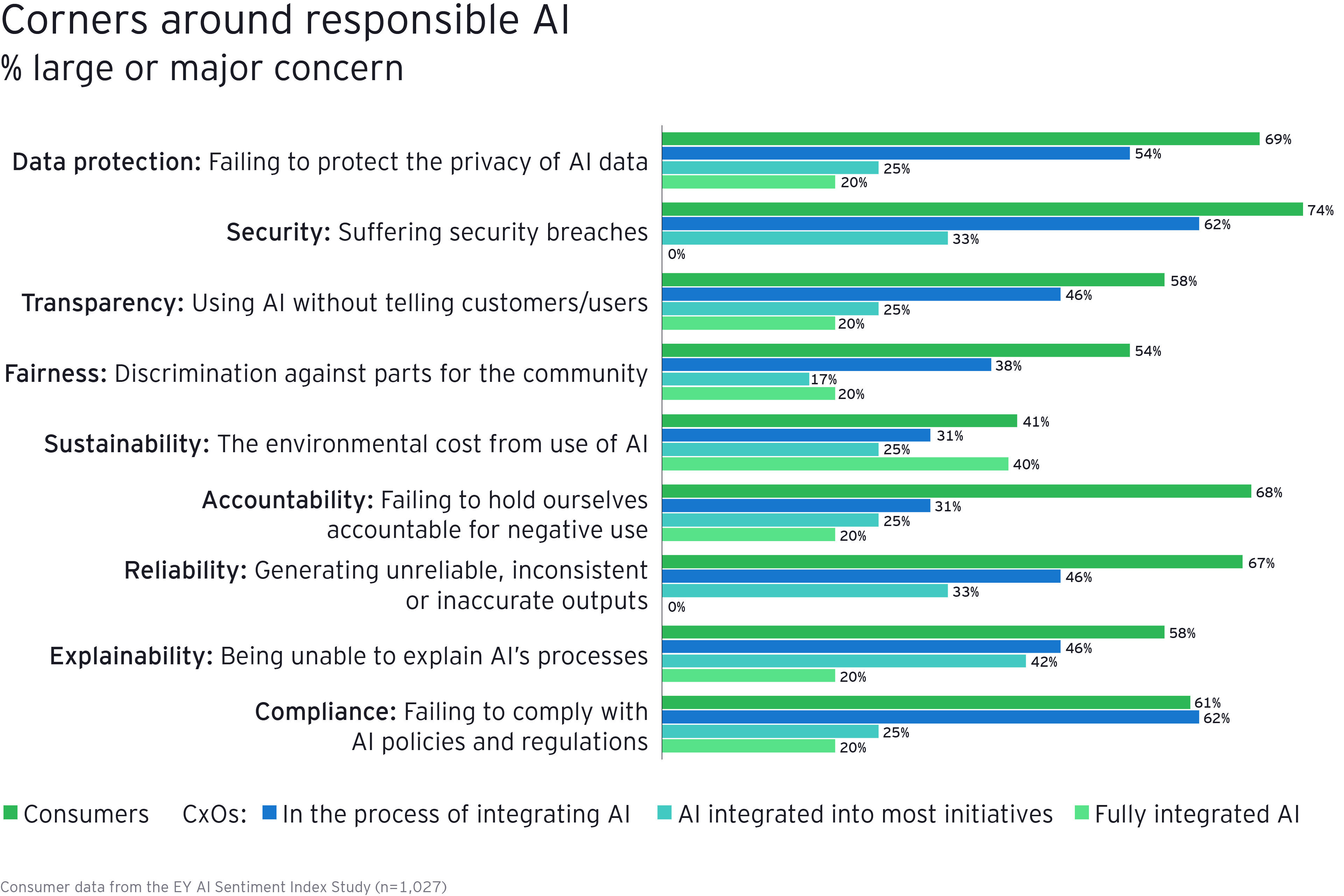

In reality, leaders’ familiarity with the risks is patchy. In some cases, just one in five leaders is moderately or extremely familiar with the risks of the technology they are already deploying or plan to deploy within a year.

Organisations are committing to rollouts without having the controls, policies or readiness to manage the risks.