EY refers to the global organisation, and may refer to one or more of the member firms of Ernst & Young Global Limited, each of which is a separate legal entity. Ernst & Young Global Limited, a UK company limited by guarantee, does not provide services to clients.

Realising the benefits of AI requires leaders to build confidence and agency – not just technology.

In brief:

- The EY AI Sentiment Index Study shows most people (82%) are already using AI to enhance how they live and work, but just 57% feel comfortable with it.

- AI’s potential excites people as much as it worries them. Leaders must tap into this enthusiasm while addressing real concerns.

- Great leaders help people engage with AI. When it’s relevant, intuitive and human, it’s transformative.

Artificial Intelligence (AI) has become an integral part of the way we live and work. The AI Sentiment Index Study, a global survey of over 15,000 people, shows that 82% of respondents had consciously used AI in the past six months. And many will have used or relied on AI without even realising it. This is not just a technology revolution – it’s a human one. AI is changing what people can achieve.

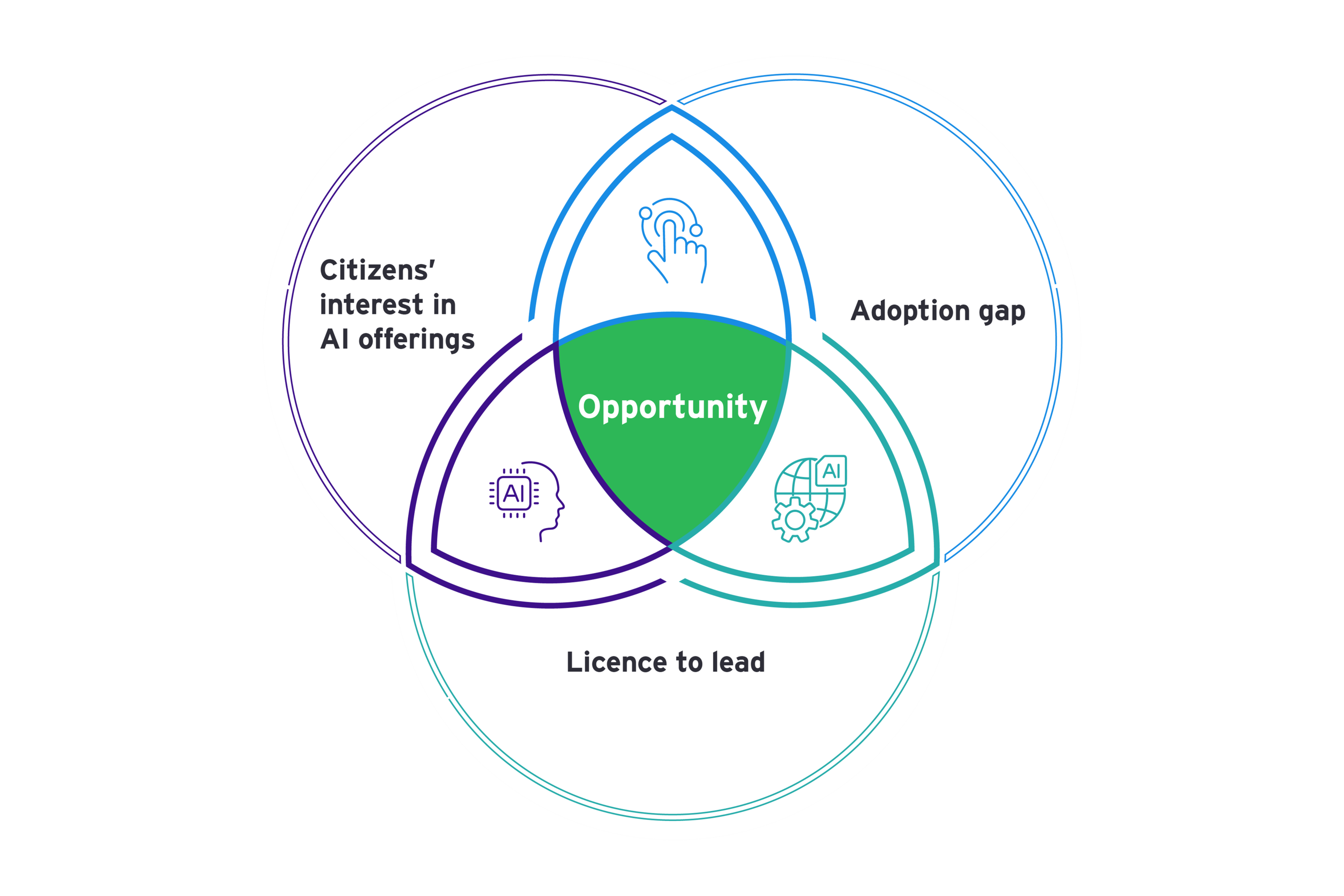

However, there is an adoption gap – a space between how much people are willing to use AI and how much they actually do. That is due to concerns around trust, privacy, and control, but it’s also about what’s currently available. Better AI tools matter, but it’s equally important to ensure that people want to use them, and that they see the value. Closing this gap is a significant opportunity for organisations.

This is where leadership is critical. Organisations that actively create confidence around AI, demonstrate its benefits, and empower people to engage on their own terms will put themselves in the strongest position – not just to implement AI, but to shape its role in business and society.

We call this the ‘Licence to Lead’. Organisations can earn and grow their licence by ensuring they use AI in ways that align with human needs and expectations, and enhance human potential rather than diminishing it.

This could be more of a challenge than many leaders imagine. As Erik Heller, EY Oceania Customer Insights Lead, says: “Although AI is progressing at a remarkable pace, trust in the organisations that aim to influence its future is still lacking in Australia and New Zealand. Trust in AI extends beyond the technology itself; it hinges on whether individuals believe that these organisations will leverage it in ways that genuinely benefit them”.

This report explores where adoption gaps exist, what it takes to close them, and how organisations can create a licence to lead, so they are best placed to benefit from AI now and to shape its future.

How a licence to lead can transform human potential in an AI world

Chapter 1

AI is reshaping our daily lives

Understanding how people feel about AI today reveals insights into its future.

People’s engagement with AI today reflects a focus on practicality. Most are not interested in AI in itself; they want to know how it can help them meet existing goals. Today, the most common uses are for straightforward, efficiency-driven tasks. Some applications are highly specific, such as managing electrical consumption, while others are more general and widely applicable, like learning about a topic or summarising information. When AI provides immediate tangible value, people are interested.

But AI adoption depends on confidence as much as it does on functionality, and there are clear boundaries to where people feel comfortable adopting AI today. More complex systems, tasks requiring personal data, or emotionally engaged interactions remain less commonly used. These applications often demand a greater level of confidence or user engagement, or they require people to use technologies in ways they don’t understand.

Overall, these boundaries – which we explore in more detail later – will shift as AI evolves. It’s important to track them, so business leaders can make decisions based on where people are now and where they are heading, not where they used to be. Leaders who assume AI adoption will follow a simple trajectory will miss the deeper reality: as AI becomes more powerful, it needs to become more trusted and more intuitive.

This is what the AI Sentiment Index does, by quantifying global levels of comfort with AI. Today, the global index score is 68 out of 100. Australia and New Zealand both sit below this, at 54 and 53 out of 100, respectively.

Those who are most comfortable with AI are significantly more engaged – on average, they’ve used 15 different AI applications in the past six months, compared to six among those who feel neutral and just three among those who remain uncomfortable. The data highlights a reinforcing effect: those who feel comfortable with AI tend to explore more applications, gradually increasing their confidence and usage.

These early adopters provide a glimpse into AI’s future: they’re not only more accepting of AI-powered recommendations and automation but also more likely to appreciate AI-driven customer experiences, such as chatbots, and even social interactions.

Growing adoption is about ensuring the next wave of users feel empowered, not left behind. People lean toward AI when they understand it, and they understand it best when they have the chance to try it. As AI continues to embed itself into people’s lives, those who recognise the differences in how people engage with it – what excites them, what holds them back – will have the clearest view of where AI is heading next. Organisations that cultivate confidence – by creating safe opportunities for people to explore AI – will be best positioned to accelerate adoption and shape AI’s role in society.

Key question: How are you designing AI experiences that align with real human needs, rather than assuming adoption will happen on its own?

Chapter 2

Attitudes about AI are deeply personal

Understanding AI sentiment across six key personas highlights both opportunities and risks.

AI adoption and sentiment are not uniform around the world. Demographic factors like age, education, and geography play an important role in how people are relating to AI. But psychographics – how people think, what they value, and their emotional response to technology – are just as critical. The AI Sentiment Index reveals significant global variation in all these areas, highlighting both opportunities and risks for businesses. AI is not a one-size-fits-all story – it’s a deeply personal, context-driven experience.

At a national level, AI sentiment varies widely. Scepticism remains more pronounced in Australia (54) and New Zealand (53), which both sit at the lower end of the Index. It’s countries like India and China that are leading the way, with sentiment scores of 88, reflecting optimism and deep AI integration into daily life. These differences reflect more than just policy or infrastructure – they reveal how different societies are internalising AI’s role in their futures.

To better understand these variations, we identified six distinct AI sentiment personas. These provide a useful way of mapping global differences in AI engagement – from those who are most excited to those who remain deeply sceptical.

- Cautious optimists: Welcome AI’s potential while remaining mindful of risks.

- Unworried socialites: Embrace AI’s benefits with few reservations.

- Tech champions: Frequently use AI and see long-term benefits but still advocate for regulation.

- Hesitant mainstreamers: Express concerns about data privacy and transparency but recognise the benefits AI could bring to society.

- Passive bystanders: Express concerns about misinformation and maintain an ambivalent attitude toward AI’s adoption and impact.

- AI rejectors: Resist AI altogether, prioritising human connection and advocating for strict regulations.

Countries in Oceania, Europe and North America have lower concentrations of tech champions than countries in the Middle East and Asia.

Our study highlights a fundamental reality: discomfort with AI does not mean disengagement. People are still finding ways to use it, even as they question its broader implications. For example, hesitant mainstreamers worry about data privacy, but 56% of Australians and 51% of New Zealanders agree AI makes it easier to complete technical or academic tasks. Even passive bystanders, who engage less frequently with AI, still interact with it in some form.

People with concerns about AI still recognise its benefits – apart from those who reject it outright. They find ways to engage with AI where they see clear value. For some organisations, this is a moment of strategic choice: do you see AI hesitation as a barrier, or as an opportunity to build familiarity and confidence? Can you address concerns, while recognising and building on the desire people have to embrace AI?

For businesses and governments alike, these personas provide a powerful lens for understanding where the opportunities are. They also show the value of helping people feel empowered to use AI, rather than convincing them to do so. Organisations that recognise this will not just drive adoption, they will shape AI’s role in society. “Many Australians and New Zealanders worry about how AI might harm vulnerable and at-risk segments in our communities,” says Katherine Boiciuc, EY Regional Chief Technology and Innovation Officer, Oceania. “This concern must be addressed through targeted upskilling programs and increased innovation investment with equitable access so that no one is left behind.”

Key question: What are you doing to make AI feel tangible, useful, and relevant to the people you serve – whether employees, customers, or citizens?

Chapter 3

AI must support people, not diminish them

What do people trust AI to do, and where do they draw the line?

Across multiple areas of life and work, people’s openness to AI is higher than their current level of engagement. This is the AI adoption gap. It represents a significant opportunity, and closing it is about more than access to technology.

AI use today is concentrated in certain key areas. It’s highest in customer experience (CX), with 29% of Australians and 33% of New Zealanders using AI to access customer support. Yet even in sectors where AI adoption is lower – such as energy or financial services – the AI Sentiment Index shows that people are open to AI playing a role.

Many are likely already using services or experiences driven by AI, but without realising it. As Blair Delzoppo, EY APAC FSO Technology Consulting Lead, notes: “Promisingly, our research indicates that in Australia and New Zealand, there is a strong level of comfort among individuals regarding the use of AI by financial institutions for fraud protection. However, the limited number of respondents who report having utilised AI for this purpose highlights the need for financial institutions to enhance their efforts in educating customers about the ways AI is actively supporting them.”

Some of the most promising AI applications validated by our study align with areas where businesses are actively developing solutions. These include:

- Media and entertainment: Personalised content recommendations

- Technology: Managing smart devices

- Retail: Accessing customer support

- Health: Diagnosing symptoms

- Financial services: AI-driven financial wellness

In developing these solutions, it’s valuable to note this finding from our study: agency is as important as privacy. People are more comfortable with AI in monitoring and preventative applications and become wary when AI handles personal data or makes decisions on their behalf. For example, they are relaxed about AI monitoring that keeps a vehicle up-to-date with maintenance or prevents shoplifting, but they become highly uncomfortable with AI monitoring that’s trying to improve shopping experiences or recommend ways of making employees more efficient. In Australia, only three in ten people feel comfortable with AI being used to monitor employees for efficiency, analyse resumes for hiring, or assess employee performance. For New Zealand, this drops to two in ten people. Even younger generations – who tend to be more comfortable with AI in general – remain hesitant when it comes to AI’s role in workplace decision-making.

Openness to AI declines even further when the technology is used to make decisions that humans would normally make. While 52% of Australians and 50% of New Zealanders are comfortable with AI preventing crime, only 32% and 26%, respectively, are comfortable with AI making legal decisions. In healthcare, 47% of Australians and New Zealanders support AI predicting health issues, but only 26% and 20%, respectively, trust AI as a medical practitioner.

Discomfort is about the role people play in AI-driven systems, not the technology itself. The fear isn’t so much about AI replacing people, it’s about AI diminishing the value of people thinking critically, making choices, and having autonomy. That’s why bridging the adoption gap requires not just technological advancements, but a nuanced approach that aligns AI’s evolution with real human concerns and expectations. It also requires a mindset shift – not “How do we convince people to use AI?” but “How do we create the conditions where people want to use AI?”

“By fostering understanding and dialogue, we can cultivate the social licence necessary to drive the development of world-class AI solutions that benefit all,” says Katherine Boiciuc, EY Regional Chief Technology and Innovation Officer, Oceania. “As we navigate this rapidly changing AI landscape, it is imperative that leaders lean towards AI and be proactive in addressing the trust deficit and skills gap posed by AI.”

Empowerment is critical. Are people learning to use AI because they fear being left behind, or because they see how it makes them better at what they do? Organisations need to create space for people to explore AI on their own terms – safe opportunities to experiment, learn, and build confidence. Adoption doesn’t happen through reassurance; it happens through experience. AI is a social change, and like any social change, it will take root when people feel ownership of it.

Key questions:

- How are you creating opportunities for people to explore and engage with AI in ways that are meaningful to them?

- What steps are you taking to bridge the trust deficit, ensuring people feel AI is working in their best interests?

- What are you doing to create safe, low-risk opportunities for people to play, experiment, and develop real confidence with AI?

Chapter 4

Control or agency – what really matters?

The way leaders design AI systems will determine whether AI improves human decision-making or erodes it.

If people feel more confident with AI, what might become possible? How ready are people to let AI take a more autonomous role in their lives? Beyond simple automation, AI can take proactive steps to assist, predict, and personalise experiences. The technology is evolving rapidly, but it still largely operates at a broad, reactive level. So, AI serves up ads based on past searches, recommends content based on general interests, and nudges people toward familiar consumer choices. It doesn’t yet anticipate real-life context or deeply understand individual needs in a meaningful way. The next frontier isn’t just AI that reacts, but AI that truly aligns with human intent and aspirations.

Could an AI agent be trusted to order groceries based on what it knows about someone’s schedule, tastes, health goals, and what’s already in their kitchen – without human input? Would people be comfortable with AI making high-stakes, personalised decisions on their behalf? And if so, who would they trust with the personal data needed to make that happen?

The data suggests that while people are open to AI playing a greater role, boundaries around decision-making exist. The majority of people in Australia and New Zealand are comfortable with agentic AI predicting emergency situations (58% and 55%, respectively) or protecting against fraud (52%). But even in areas where AI could enhance efficiency, such as evaluating insurance or fraud claims, comfort levels remain moderately low at 31% in Australia and 28% in New Zealand.

People still want humans in control over decisions that shape their lives. They are reluctant to let AI fully replace human judgment in high-stakes personal interactions. And while AI-driven personalisation is widely used today, just 23% of New Zealanders are comfortable with companies using their personal data and past behaviours to make tailored product or service recommendations. In Australia, this sits slightly higher at 33%.

This is the “social paradox” of AI adoption: many people enjoy interacting with AI and see its benefits, yet at the same time, they fear it eroding human agency, decision-making, and connection.

Yet in some areas people are already accepting AI’s ability to make complex, real-time decisions. For example, modern cars are full of technologies to help people drive better. In our study, 54% of global respondents would be comfortable with AI optimising their navigation or driving. In Australia, this comfort level sits at 44%, and in New Zealand it is slightly lower at 37%. Services like Waymo One, which now offers fully autonomous ride-hailing in major U.S. cities, show that AI-powered driving isn’t just theoretical – it’s already on the roads. Cities like Los Angeles are using AI to analyse traffic patterns and optimise traffic light timings, reducing congestion.

The same principle is emerging in B2B applications, where manufacturers and retailers are using AI for touchless automated ordering and supply chain digital twins, anticipating demand and responding dynamically. In these examples, and others like them, AI is enabling people to make more strategic, high-value contributions.

AI is also reshaping what we might think of as uniquely human forms of interaction. For example, 58% of Australians and New Zealanders comfortable with AI in our study believe talking with AI can help some people develop better social skills, and 33% say chatting with an AI companion can be as enjoyable as talking to a human. Among our six personas, 13% of cautious optimists and unworried socialites say they have formed an emotional connection with AI in the last six months.

At its best, AI is not just an impersonal machine or a functional tool – it’s an enabler, helping people connect, learn, and create. The opportunity lies in ensuring AI supports human connection rather than replacing it, helping people to feel more confident in using AI while creating meaningful ways for people to interact, learn, and grow. “A human-centred approach is critical to using AI to reimagine how we work, innovate and create value,” believes Jenelle McMaster, EY Regional Deputy CEO and People & Culture Leader, Oceania. “Human-centred means giving attention and care to how humans feel and are impacted by tools and decisions. We know that even as people increasingly use AI for writing, research or reviewing their daily work, they remain wary of it as a concept. Supporting your team to learn and grow their careers with AI as an indispensable sidekick will help your organisation outperform in the future.”

This is where an AI-first mindset becomes critical. Organisations that focus only on AI’s capabilities – without considering the humans who engage with it – will struggle to drive adoption. Success depends on making AI intuitive, empowering, and embedded in ways that amplify human agency rather than undermine it.

Key questions:

- How are you ensuring AI strengthens human agency – helping people do more, not just overseeing the machine?

- How are you positioning AI as a tool for enhancing human connection, rather than one that risks replacing it?

Chapter 5

Licence to lead: Do you have what it takes?

AI’s future will be shaped by leaders who build confidence, empower people, and create a bold vision of AI as a tool for human potential.

While many people are enthusiastic about – or at least open to – a greater role for AI in their lives, this confidence might prove fragile. Even among those who feel comfortable with AI, concerns remain – particularly around misinformation, data privacy, and the need for clear human oversight. More than three quarters of respondents (80% in Australia, 85% in New Zealand) worry about AI-generated false information being taken seriously, 72% of Australians and 74% of New Zealanders fear AI will become uncontrollable without human oversight, and 68% in both countries are concerned about AI training on personal data without consent.

The trust deficit is not just a risk – it’s a defining strategic challenge. Across industries, people are uncertain whether businesses will manage AI in ways that truly serve them. Even in technology, where AI innovation is most advanced, trust sits at just 35% in Australia and 31% in New Zealand. Financial services (31% and 30%, respectively), healthcare (38% and 35%, respectively), and consumer goods (44% and 30%, respectively) show similar patterns. Government (34% and 23%, respectively) and media (26% and 21%, respectively) – two areas critical to AI’s role in public life – are even lower, reinforcing concerns about AI’s impact on information integrity and governance. For leaders, the question is no longer, ‘Will people trust AI?’ but ‘How will we earn and sustain their confidence at scale?’

This is what leadership in AI truly means. It’s not just about implementing the technology, but about shaping a future where AI expands human potential. The organisations that succeed will be those that recognise AI’s real power lies not in automation, but in augmentation – elevating what people can achieve rather than replacing them.

“Leaders must prioritise practices like keeping humans in the loop at key points,” says Jenelle McMaster, EY Regional Deputy CEO and People & Culture Leader, Oceania. “This includes implementing thorough testing to identify and address biases, establishing strong safeguards against misuse, and equipping our workforce for the future of work.”

Organisations that succeed in AI will be those that balance innovation with responsibility. Addressing fears around misinformation, bias, and privacy is a prerequisite for adoption. This means proactively tackling concerns rather than reacting to criticism, and committing to clear oversight, transparency, and ethical AI practices – not just in principle, but in execution. But true leadership requires more than just mitigating risk – it requires a bold vision of AI as a catalyst for human ingenuity, imagination, and progress.

This is your licence to lead. Not because AI demands new governance models, but because it presents a once-in-a-generation opportunity to transform what’s possible. The organisations that lead in AI won’t be those that build the best tools – they will be the ones that empower people to do their best work, to think bigger, to create more, to solve harder problems. The future of AI is not about the technology itself – it’s about what humans will achieve with it.

Key questions:

- What are you doing to build an AI-first mindset within your organisation, making AI feel like a natural, empowering part of work?

- What bold vision are you setting for how AI will transform what’s possible for people, not just processes – and how does that shape your licence to lead?

- How are you ensuring AI enables creativity, problem-solving, and human ingenuity, rather than just improving efficiency?

- How are you helping your workforce develop the habits, mindsets, and skills that will allow them to thrive in an AI-powered world?

Summary

AI has become a fundamental part of daily life, yet gaps in trust and engagement persist. Organisations that bridge these gaps – by making AI intuitive, relevant, and empowering – will lead the way. Success depends on more than technology; it requires a bold vision for how AI can expand human potential. Leaders who create confidence, enable exploration, and embed AI in meaningful ways won’t just drive adoption – they’ll define AI’s role in shaping the future. The opportunity is clear: the time to lead is now.
