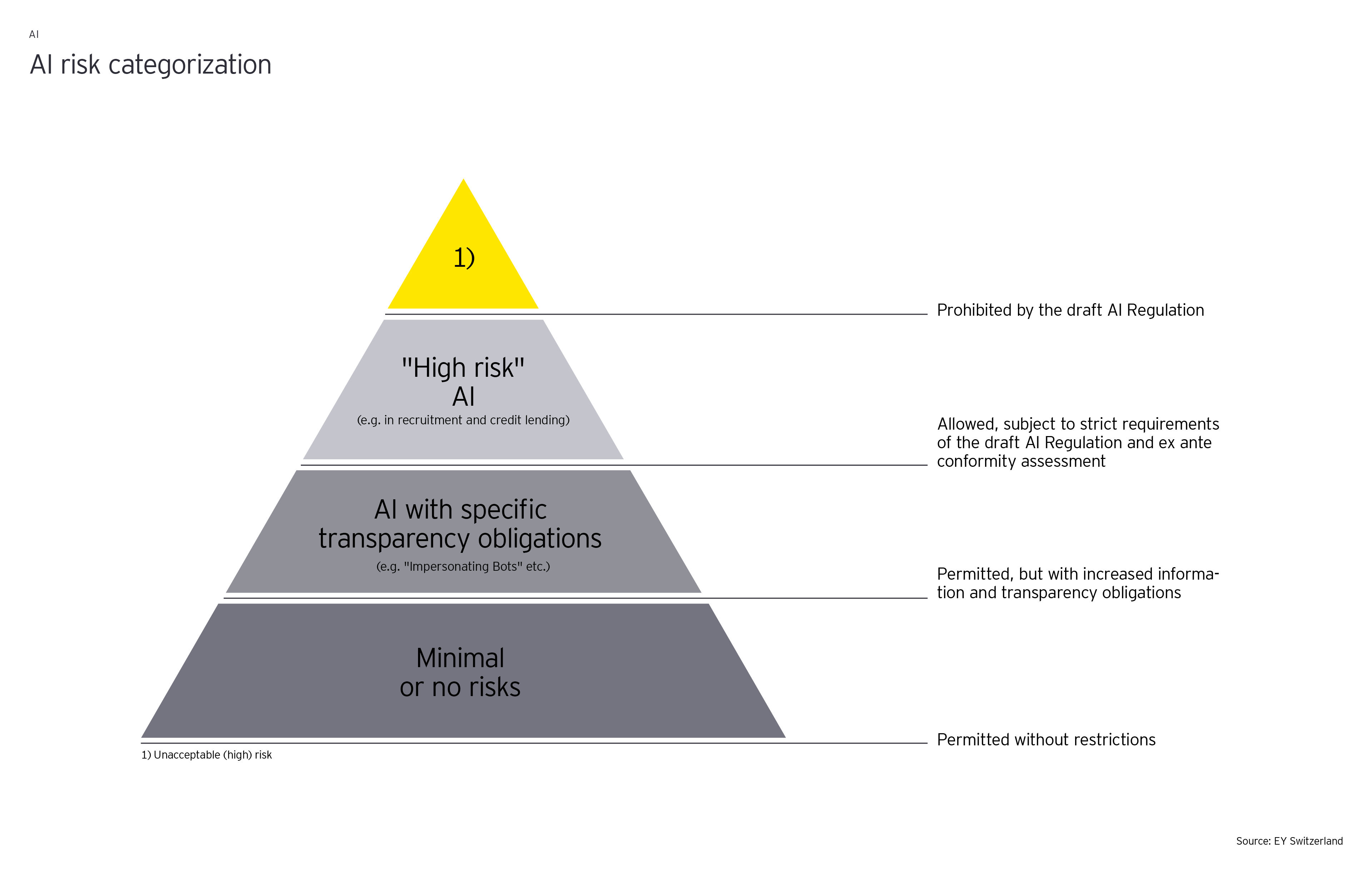

AI systems with an unacceptably high risk: For instance, AI systems or applications that manipulate human behaviour to circumvent users' free will. Such AI systems are prohibited under the draft AI Regulation.

AI systems with a high risk: The draft AI Regulation recognizes various systems that could fall under this category. Of relevance are, for instance, AI systems in the area of workforce management that are intended to be used for the recruitment or selection of individuals, in particular for the advertisement of jobs, the screening or filtering of applications, and the evaluation of candidates in the context of interviews or tests, as well as AI intended to be used for decisions on promotion and dismissal or for task assignment and performance monitoring. In the area of "access to and use of essential private services", AI systems designed to assess the creditworthiness of individuals or to establish their credit score (excluding AI systems put into service by small providers for their own use) are also considered high risk. The high risk of such systems lies especially in their potential for discrimination arising from the algorithms used.

In general, "high-risk" AI systems are allowed, subject to the strict requirements of the draft AI Regulation and an ex ante conformity assessment (third-party conformity assessment activities, including testing, certification and inspection).

AI systems with specific transparency obligations: AI systems that fall under this category are for example chatbots and so-called impersonator bots. These AI systems are permitted, but with increased information and transparency obligations such as an obligation of the user and the provider to provide concise, complete, correct and comprehensive information about:

- The identity and the contact details of the provider (or its authorised representatives),

- Characteristics, capabilities and limitations of performance of the high-risk AI system,

- Changes to the AI system and its performance,

- The human oversight measures,

- The expected lifetime of the high-risk AI system and any necessary maintenance and care measures to ensure the proper functioning of that AI system, including as regards software updates.

AI systems with minimal or no risk: These AI systems are permitted without any restrictions under the draft AI Regulation.

What to pay attention to from a data privacy perspective?

Since the draft AI Regulation complements the GDPR, organizations using or deploying an AI system will need to comply with both regulations. In addition, Swiss organizations (or other organizations whose activities fall under the scope of the revised Swiss Federal Act on Data Protection (revFADP)) will need to observe the Swiss data privacy requirements as well.

In addition to the GDPR and revFADP requirements, the draft AI Regulation introduces further or supplementary obligations, in particular in the areas of: (i) risk management, (ii) data and data governance practices, (iii) technical documentation, (iv) record-keeping, (v) transparency and provision of information to users, (vi) human oversight, (vii) accuracy and robustness, and (viii) cybersecurity. From an accountability perspective, the AI system provider must be able to demonstrate compliance with these requirements.

Data Governance and Management practices

Pursuant to the draft AI Regulation, "high-risk" AI systems must be developed in such a way that they meet the respective data quality requirements. As the accuracy of an AI system strongly depends on the quality of its data, the training, validation and testing data sets are subject to specific data governance and management practices. These practices cover the relevant design choices, data collection, relevant data preparation processing operations, the examination of possible biases, the prior assessment of data availability and the identification of possible data gaps.

Decisions based on AI systems are expected to be fair, unbiased and non-discriminatory. That is why a mechanism to prevent biases should be embedded in AI systems from the outset.
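As an illustration of what an "examination of possible biases" could look like in practice, the following minimal sketch compares how often each group in a data set receives a positive outcome. The draft AI Regulation does not prescribe any particular metric; the functions `selection_rates` and `rate_ratio`, the group labels and the choice of comparing selection rates are all assumptions made for this example.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the AI system produced a positive outcome (hypothetical format).
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Return the lowest selection rate divided by the highest one.

    A value close to 1.0 means the groups are treated similarly on this
    measure; a low value is a signal to investigate, not proof of bias.
    """
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a positive outcome
    return min(rates.values()) / highest


# Hypothetical screening outcomes for two applicant groups.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = selection_rates(sample)
print(rates, rate_ratio(rates))
```

A check of this kind only flags differences in outcomes. Whether a difference is justified or discriminatory remains a legal and contextual assessment, and the result should be documented as part of the data governance practices.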

Transparency and information duty

The operation of "high-risk" AI systems should be sufficiently transparent to enable users to interpret the system's output. Users need not only to be able to identify the respective AI system but also to understand what data the AI system has been trained on. Transparency can thus come into play at two points in time: first, when the user's information is entered into the AI system (ex-ante transparency), and second, after the AI system has been applied to the user to deliver specific outcomes (ex-post transparency).

AI systems also have to be accompanied by concise, concrete and clear instructions for use. This information duty goes hand in hand with the transparency obligations under Art. 13 and 14 of the GDPR (or Art. 22 of the GDPR with respect to automated decision-making with legal or other significant effects on data subjects), and Art. 21 of the revFADP (or Art. 25 of the revFADP where automated decisions are foreseen).

Especially where "high-risk" AI systems are used, such as creditworthiness checks, KYC tools or tools for the evaluation of potential employees in the recruitment process, organizations must ensure that users are aware that they are interacting with an AI system (unless this is obvious from the context) and must provide concise and complete information about the relevant technical functions.

What is the risk for organizations if they fail to comply?

Organizations that fail to comply with the draft AI Regulation requirements may be subject to a variety of sanctions, including administrative fines of up to EUR 30 million or 6% of a company's total worldwide annual turnover for the preceding financial year, whichever is higher.
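To make the order of magnitude concrete, the sketch below computes the upper limit of such a fine for a given turnover. It assumes that the higher of the two amounts applies, as the draft text provides for companies; the function name `max_fine_eur` and the sample turnover figures are hypothetical, and the actual fine in any given case would be set by the competent authority.

```python
from decimal import Decimal

FIXED_CAP_EUR = Decimal("30000000")  # EUR 30 million
TURNOVER_RATE = Decimal("0.06")      # 6% of total worldwide annual turnover


def max_fine_eur(worldwide_annual_turnover_eur: Decimal) -> Decimal:
    """Upper limit of the fine, assuming the higher of the two amounts applies."""
    turnover_based = worldwide_annual_turnover_eur * TURNOVER_RATE
    return max(FIXED_CAP_EUR, turnover_based)


# Hypothetical turnover of EUR 1 billion: 6% is EUR 60 million, above the fixed amount.
print(max_fine_eur(Decimal("1000000000")))
# Hypothetical turnover of EUR 100 million: 6% is EUR 6 million, so EUR 30 million applies.
print(max_fine_eur(Decimal("100000000")))
```

Under this reading, the turnover-based amount becomes the relevant limit once worldwide annual turnover exceeds EUR 500 million.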

What are the next steps?

The AI Regulation, once adopted, is expected to have a significant impact on AI regulatory approaches across multiple countries. Considering the growing use of AI by organizations in different areas of activity (finance, KYC, credit checks, HR, big data, machine learning tools, bots, security, etc.), organizations are advised to take the upcoming regulatory requirements very seriously.

Organizations should focus mainly on:

- Considering AI regulatory requirements in the early stage of deployment of their AI systems or tools.

- Specifically in relation to automated decision-making, carrying out a gap assessment of all existing systems and identifying which could be impacted by the new AI rules.

- Adequately assessing and documenting the results.

- Applying a risk-based approach.

- Starting the implementation early.
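A gap assessment of this kind can start from a simple inventory of AI systems mapped to the risk categories described above. The following sketch is illustrative only: the `AISystem` record, the use-case labels and the `USE_CASE_TIERS` mapping are assumptions built from the examples in this article, not a legal classification, and anything the mapping does not cover is deliberately routed to legal review.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    PROHIBITED = "unacceptable risk"
    HIGH = "high risk"
    TRANSPARENCY = "specific transparency obligations"
    MINIMAL = "minimal or no risk"
    UNCLASSIFIED = "needs legal review"


# Hypothetical mapping based on the examples in this article. No use case is
# mapped to MINIMAL here, because that conclusion should follow from review.
USE_CASE_TIERS = {
    "behavioural_manipulation": RiskTier.PROHIBITED,
    "recruitment_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "chatbot": RiskTier.TRANSPARENCY,
}


@dataclass(frozen=True)
class AISystem:
    name: str
    use_case: str


def gap_assessment(inventory):
    """Group system names by provisional risk tier for documentation."""
    result = {tier: [] for tier in RiskTier}
    for system in inventory:
        tier = USE_CASE_TIERS.get(system.use_case, RiskTier.UNCLASSIFIED)
        result[tier].append(system.name)
    return result


# Hypothetical inventory of systems already in use.
inventory = [
    AISystem("cv-ranker", "recruitment_screening"),
    AISystem("helpdesk-bot", "chatbot"),
    AISystem("sales-forecast", "demand_forecasting"),
]
for tier, names in gap_assessment(inventory).items():
    print(f"{tier.value}: {names}")
```

The output is a provisional grouping that can be documented and then refined with legal input, which fits the risk-based approach: systems in the high-risk group receive attention first.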

Given the additional effort and resources that organizations will have to invest in order to comply with the new AI requirements, early preparation is crucial, especially for large organizations.

Many thanks to Lucia Batlova, Laura Grünig and Timea Nagy for their valuable contributions to this article.