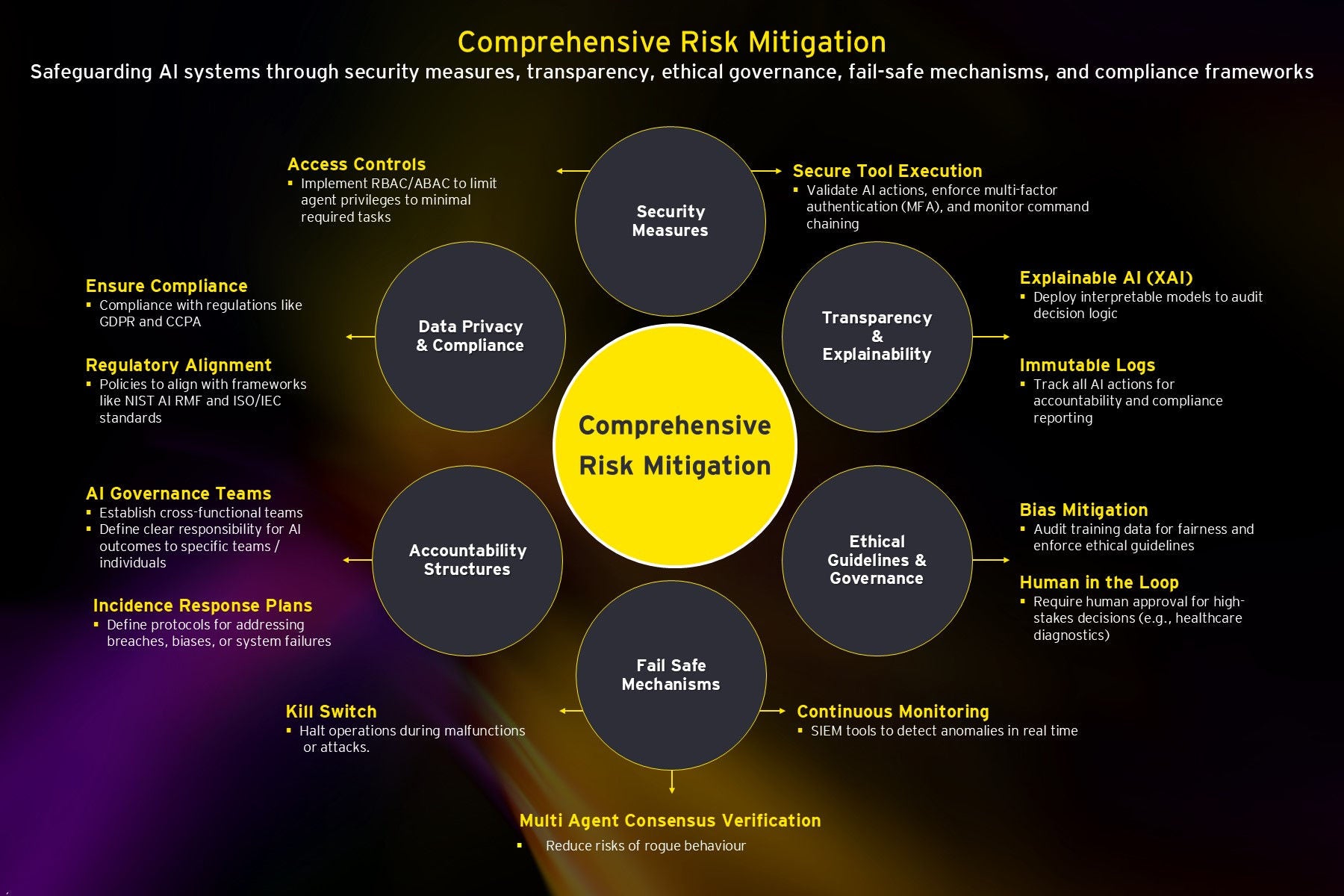

The enhanced framework builds on the EY AI risk and governance framework. It is multi-dimensional, addressing risk across eight core domains that together form an integrated shield of controls and oversight.

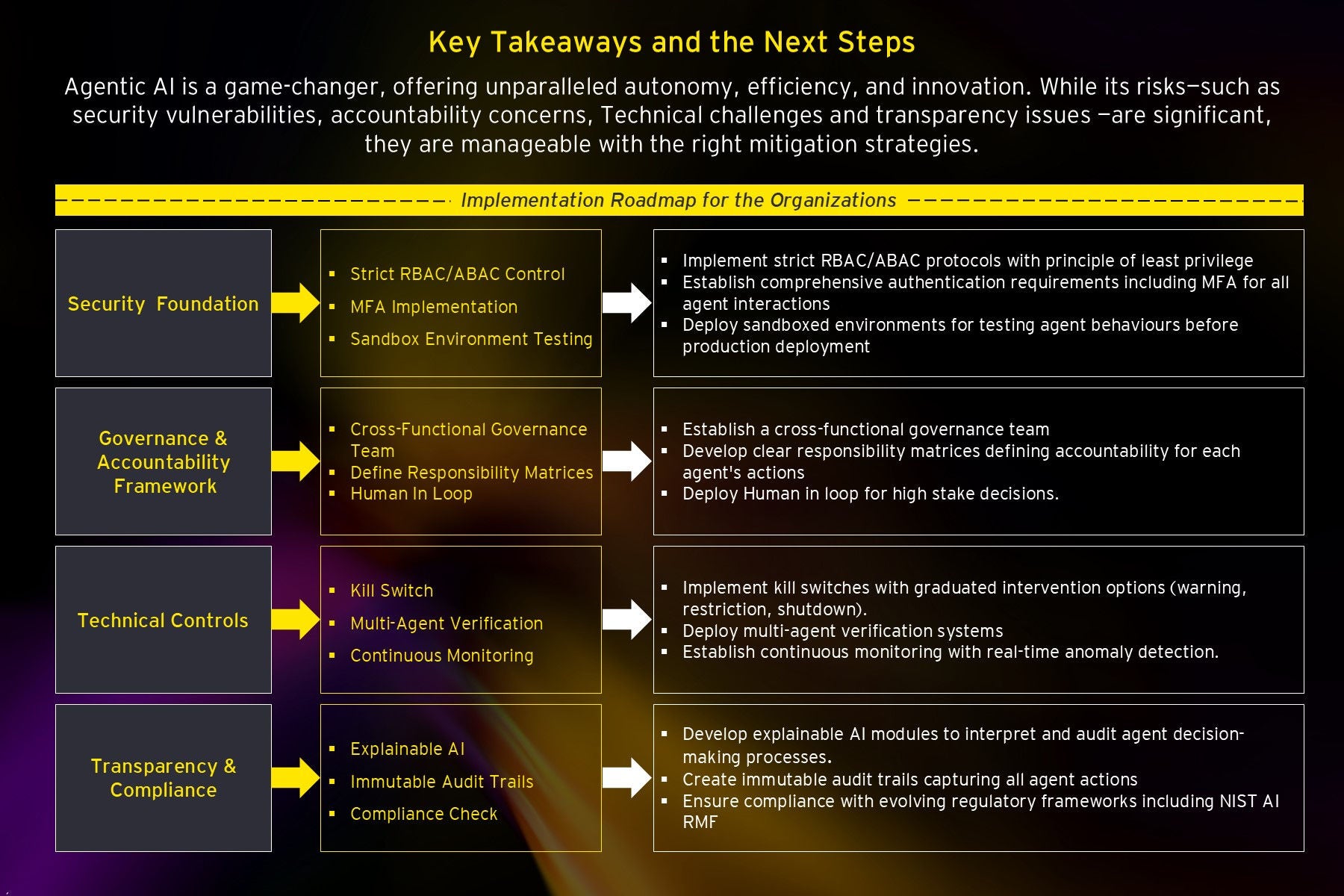

Implementation roadmap for organizations

Implementing a risk framework for Agentic AI applications rests on four key pillars: security foundation, governance and accountability framework, technical controls, and transparency and compliance.

Security foundation

This pillar aims to safeguard AI systems against vulnerabilities and unauthorized access. It includes:

- Implementing strict Role-Based Access Control (RBAC) or Attribute-Based Access Control (ABAC) protocols, following the principle of least privilege, to limit access to sensitive AI functions.

- Enforcing Multi-Factor Authentication (MFA) for all agent interactions, adding an extra layer of security.

- Testing AI agents in a sandbox environment to identify and mitigate behavioral risks.

- Detecting and preventing unauthorized activities through command chain monitoring.
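As an illustration of the access-control and MFA points above, the following is a minimal sketch of a deny-by-default permission check. The role names, actions and the `mfa_verified` attribute are hypothetical and chosen only for this example; a real deployment would rely on the organization's identity and access management platform rather than an in-memory table.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission map. Anything not listed is denied,
# which is how least privilege is expressed in this sketch.
ROLE_PERMISSIONS = {
    "analyst": {"read_report"},
    "operator": {"read_report", "run_agent"},
    "admin": {"read_report", "run_agent", "update_policy"},
}


@dataclass(frozen=True)
class AccessRequest:
    role: str           # role claimed by the caller (RBAC input)
    action: str         # sensitive AI function being requested
    mfa_verified: bool  # attribute checked in addition to the role (ABAC-style)


def is_allowed(request: AccessRequest) -> bool:
    """Return True only if the role grants the action and MFA has passed."""
    # Unknown roles map to an empty set, so the default outcome is deny.
    granted = ROLE_PERMISSIONS.get(request.role, set())
    if request.action not in granted:
        return False
    # Every agent interaction requires a completed MFA check.
    return request.mfa_verified


if __name__ == "__main__":
    print(is_allowed(AccessRequest("operator", "run_agent", True)))
    print(is_allowed(AccessRequest("analyst", "run_agent", True)))
```

The check is kept as a single pure function so that it can be exercised in a sandbox before being wired into any agent runtime.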

Governance and accountability framework

This pillar focuses on managing AI risks effectively, including:

- Establishing a cross-functional governance team with diverse specializations.

- Defining responsibility matrices for each agent's actions.

- Maintaining a human in the loop to ensure oversight of high-stakes decisions.

- Regularly auditing AI systems for fairness and enforcing ethical guidelines to prevent discrimination.

- Defining protocols in incident response plans to address breaches or system failures and minimize damage.

Technical controls

This pillar covers monitoring, controlling and intervening in AI operations. It includes:

- Implementing kill switches with graduated intervention options (e.g., warnings, restrictions, shutdowns) to halt AI operations in case of anomalies or risks.

- Deploying multi-agent verification systems for interactions between multiple AI agents so that collaboration does not lead to unintended consequences.

- Establishing real-time anomaly detection systems to flag deviations.

- Deploying security event management (SEM) tools to detect anomalies in real time and ensure that the AI system operates within predefined parameters.
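The graduated kill switch described above can be sketched as a small state machine. This is an illustrative example only: the anomaly score in the range 0 to 1, the threshold values and the class names are assumptions made for this sketch, not part of the framework.

```python
from enum import IntEnum


class Intervention(IntEnum):
    NONE = 0
    WARN = 1      # log and notify, agent keeps running
    RESTRICT = 2  # block high-risk actions only
    SHUTDOWN = 3  # halt all agent actions


# Hypothetical thresholds on an anomaly score in [0, 1], highest first.
THRESHOLDS = [
    (0.9, Intervention.SHUTDOWN),
    (0.6, Intervention.RESTRICT),
    (0.3, Intervention.WARN),
]


def choose_intervention(anomaly_score: float) -> Intervention:
    """Map an anomaly score to the strongest intervention it triggers."""
    for threshold, level in THRESHOLDS:
        if anomaly_score >= threshold:
            return level
    return Intervention.NONE


class KillSwitch:
    def __init__(self) -> None:
        self.level = Intervention.NONE

    def observe(self, anomaly_score: float) -> Intervention:
        # Escalate only; lowering the level requires an explicit reset.
        self.level = max(self.level, choose_intervention(anomaly_score))
        return self.level

    def may_act(self, high_risk: bool) -> bool:
        """Decide whether the agent may perform its next action."""
        if self.level == Intervention.SHUTDOWN:
            return False
        if self.level == Intervention.RESTRICT and high_risk:
            return False
        return True

    def reset(self) -> None:
        # Intended to be called by a human operator after review.
        self.level = Intervention.NONE
```

In this sketch the level never drops on its own, so a brief return to normal scores does not silently lift a restriction; a human has to call `reset()`.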

Transparency and compliance

This pillar makes AI systems interpretable, auditable and compliant with regulatory standards. For instance:

- Developing AI modules capable of explaining their decision-making processes.

- Creating immutable audit trails of all agent actions to facilitate forensic analysis in case of incidents.

- Enabling adherence to leading compliance standards by aligning organizational policies with frameworks such as NIST AI RMF and ISO/IEC standards, and with regulations such as the EU AI Act, GDPR and CCPA.
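One common way to approximate an immutable audit trail in software is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below is a simplified illustration under that assumption; the class and field names are hypothetical. Strictly speaking it makes the log tamper-evident rather than immutable, and a production system would typically pair it with write-once storage.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder hash that precedes the first entry


def _entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the record together with the previous entry's hash."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + payload).hexdigest()


class AuditTrail:
    def __init__(self) -> None:
        self._entries = []  # list of (record, hash) tuples in append order

    def append(self, agent_id: str, action: str, detail: str) -> str:
        """Record one agent action and return the hash of the new entry."""
        record = {"agent_id": agent_id, "action": action, "detail": detail}
        prev_hash = self._entries[-1][1] if self._entries else GENESIS_HASH
        digest = _entry_hash(prev_hash, record)
        self._entries.append((record, digest))
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS_HASH
        for record, digest in self._entries:
            if _entry_hash(prev_hash, record) != digest:
                return False
            prev_hash = digest
        return True
```

During forensic analysis, a failed `verify()` indicates that some entry was altered after it was written, although it does not by itself identify who made the change.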

Together, these pillars integrate the components needed to safeguard Agentic AI systems and ensure their responsible operation within the organization.