EY refers to the global organization, and may refer to one or more, of the member firms of Ernst & Young Global Limited, each of which is a separate legal entity. Ernst & Young Global Limited, a UK company limited by guarantee, does not provide services to clients.

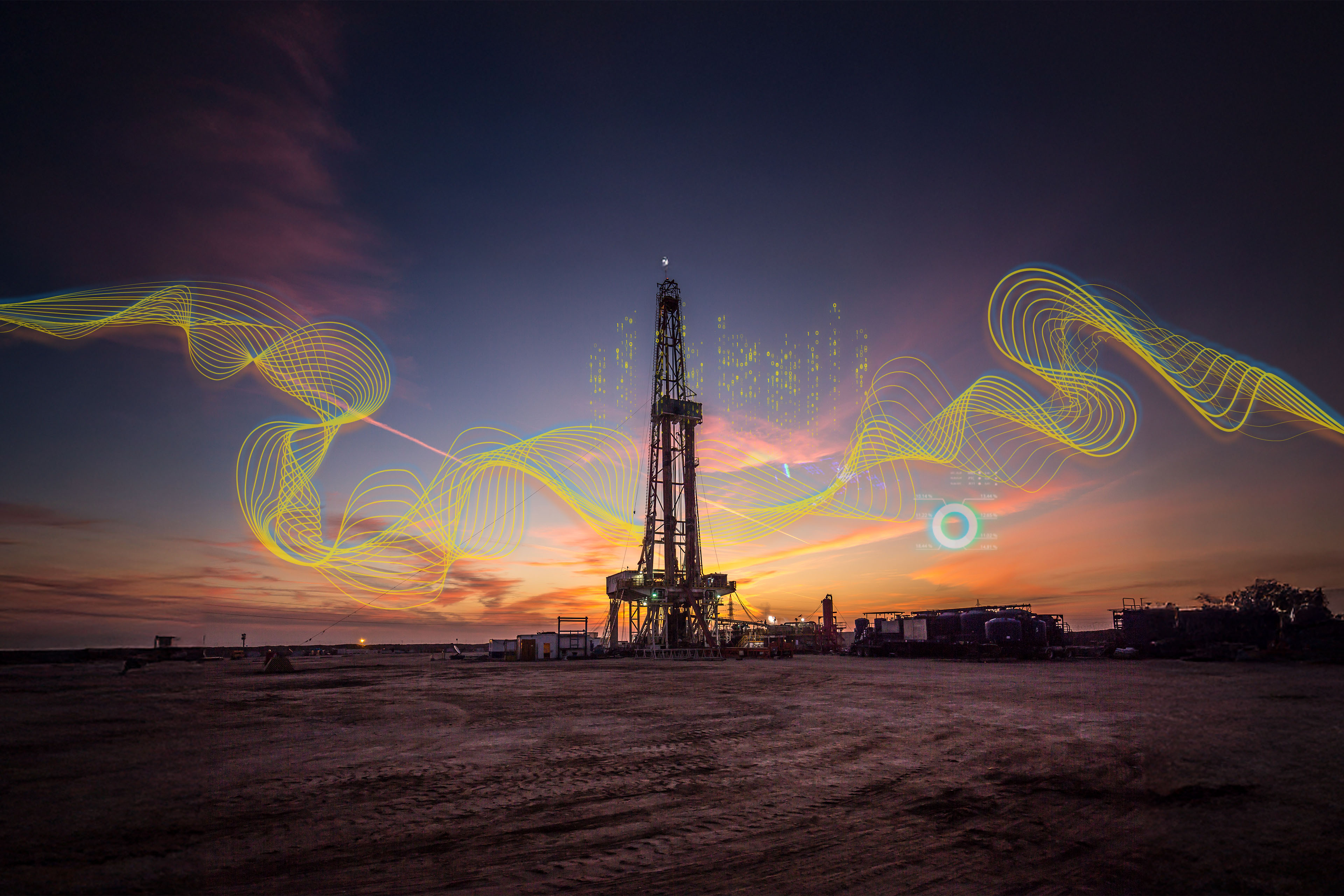

AI is transforming the oil and gas industry. See how leading innovators are making it happen — and how they see the future evolving.

After years of effort, the artificial intelligence (AI) era in the oil and gas industry is entering an exciting new phase. Operators are learning how to create and scale successful projects and reaping the rewards of their technology investments across the enterprise.

Yet the rapid pace of change in the AI field means new challenges keep emerging. How are senior oil and gas leaders adapting to the AI transformation? At an industry event, Swapnil Bhadauria, EY Americas Oil and Gas Digital Operations Leader, discussed the evolving AI landscape with several industry leaders, focusing on how to harness the combined power of machine learning and generative AI (GenAI).

Here are some highlights of that discussion, featuring three leading AI proponents from major oil and gas companies:

Q: How can machine learning and GenAI work together to optimize performance, reduce costs or drive efficiency in oil and gas operations?

Q: What are some key best practices in scaling solutions and keeping pace with the evolution of AI technology?

Q: What can we expect to see in the near term as oil and gas companies evolve along the AI journey?

Human-centered AI

As companies push the envelope with AI development and deployment, leaders in the field are recognizing that the human element is critical to acceptance and usage.

“Value creation begins with understanding where a function’s pain points are and what an ideal ‘future state’ looks like as it relates to day-to-day activities,” said Bhadauria. “And then it requires tools that can be easily adopted and customized to meet other needs. AI can’t exist in a vacuum; it must be both useful and usable to the people we support in business functions. The companies that prioritize end users’ needs and skill levels are the ones developing AI with high rates of acceptance that drive real value.”

EY teams help oil and gas companies build and implement practical, scalable AI platforms and tools that transform how they work and help them thrive.

Key takeaways

Industry leaders agree that AI is reshaping oil and gas operations, but success depends on more than technology. Companies that prioritize user adoption and explain the future of AI in practical terms will lead the way in transforming workflows and driving real value.